Multimodal Models: Architecture, workflow, use cases and development

In a world where information and communication know no bounds, the ability to comprehend and analyze data from various sources becomes a crucial skill. Enter multimodal models, the revolutionary paradigm in artificial intelligence that is transforming the way machines perceive and interact with the world. By combining the strengths of Natural Language Processing (NLP) and computer vision, multimodal models have ushered in a new era of AI, enabling machines to better understand the complex, diverse, and unstructured data that surrounds us. Multimodality is a new cognitive AI frontier that combines multiple forms of sensory input to make more informed decisions. Multimodal AI moved into the mainstream in 2022, and its possibilities have been expanding ever since, with efforts to align text/NLP and vision in a shared embedding space to facilitate decision-making. Moreover, the global multimodal AI market is expected to grow at an average annual rate of 32.2% between 2019 and 2030 to reach US$8.4 billion, according to MIT Technology Review Insights. AI models need some degree of multimodal capability to handle tasks such as recognizing emotions and identifying objects in images, which positions multimodal systems as the future of AI. According to Gartner’s Impact Radar, multimodal AI models, which integrate text, image, audio, and video, are projected to account for over 60% of generative AI solutions by 2026, up from less than 1% in 2023, a major shift away from single-modality AI models.

Although still in its early stages, multimodal AI has already surpassed human performance on some benchmarks. This is significant because AI is already part of our daily lives, and such advances have implications for many industries and sectors. Loosely inspired by how the human brain combines the senses, multimodal AI typically uses an encoder, an input/output mixer, and a decoder. This approach enables multimodal machine learning systems to handle tasks that involve images, text, or both. By connecting different sensory inputs with related concepts, these models can integrate multiple modalities, allowing for more comprehensive and nuanced problem-solving. Because this integration only works if the modalities are comparable, a crucial first step in developing multimodal AI is aligning the model’s internal representations across all modalities.

By incorporating various forms of sensory input, including text, images, audio, and video, these systems can solve complex problems that were once difficult to tackle. As this technology continues to gain momentum, many organizations are adopting it to enhance their operations. Furthermore, developing a multimodal model is not prohibitively expensive, making it accessible to a wide range of businesses.

This article will delve deep into what a multimodal model is and how it works.

- What is a multimodal model?

- Multimodal vs. Unimodal AI models

- Key components of multimodal AI

- How does the multimodal model work?

- Architectures and algorithms used in multimodal model development

- Benefits of a multimodal model

- Multimodal AI use cases

- How to build a multimodal model?

- What does LeewayHertz’s multimodal model development service entail?

What is a multimodal model?

A multimodal model is an AI system designed to process multiple forms of sensory input simultaneously, similar to how humans experience the world. Unlike traditional unimodal AI systems, which are trained to perform a specific task using a single type of data, multimodal models are trained to integrate and analyze data from various sources, including text, images, audio, and video. This approach allows for a more comprehensive and nuanced understanding of the data, as it incorporates the context and supporting information essential for making accurate predictions. Learning in a multimodal model involves combining disparate data collected from different sensors and data inputs into a single model, resulting in more dynamic predictions than unimodal systems can make. Observing the same event or object through multiple sensors provides a more complete picture, leading to more intelligent insights.

To achieve this, multimodal models rely on deep learning techniques that involve complex neural networks. The model’s encoder layer transforms raw sensory input into a high-level abstract representation that can be analyzed and compared across modalities. The input/output mixer layer combines information from the various sensory inputs to generate a comprehensive representation of the data, while the decoder layer generates an output that represents the predicted result based on the input. Multimodal models change how AI systems operate by mimicking how the human brain integrates multiple forms of sensory input to understand the world. With the ability to process and analyze multiple data sources simultaneously, the multimodal model offers a more advanced and intelligent approach to problem-solving, making it a promising area for future development.
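To make the encoder, mixer, and decoder flow concrete, here is a minimal, dependency-free Python sketch. The "encoders" are deliberately trivial stand-ins (a hashed bag-of-words for text and per-quadrant averages for a grayscale image), the mixer is plain concatenation, and the decoder is a single linear layer followed by a softmax. All function names, dimensions, and weights are hypothetical illustrations, not a production architecture.

```python
import math

FEATURE_DIM = 4  # hypothetical size of each modality's feature vector


def encode_text(tokens: list[str]) -> list[float]:
    """Toy text encoder (stand-in for BERT/GPT): hashed bag-of-words counts."""
    vec = [0.0] * FEATURE_DIM
    for tok in tokens:
        # Sum of character codes gives a deterministic bucket (unlike str hash()).
        vec[sum(map(ord, tok)) % FEATURE_DIM] += 1.0
    return vec


def encode_image(pixels: list[list[float]]) -> list[float]:
    """Toy image encoder (stand-in for a CNN): mean intensity of each quadrant."""
    h, w = len(pixels), len(pixels[0])
    quadrants = [(0, h // 2, 0, w // 2), (0, h // 2, w // 2, w),
                 (h // 2, h, 0, w // 2), (h // 2, h, w // 2, w)]
    features = []
    for r0, r1, c0, c1 in quadrants:
        values = [pixels[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        features.append(sum(values) / len(values))
    return features


def mix(*modality_features: list[float]) -> list[float]:
    """Mixer: concatenate per-modality features into one joint representation."""
    return [x for features in modality_features for x in features]


def decode(fused: list[float], weights: list[list[float]], labels: list[str]) -> dict[str, float]:
    """Decoder: linear layer (one weight row per label) followed by a softmax."""
    logits = [sum(w * x for w, x in zip(row, fused)) for row in weights]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # subtract max for numerical stability
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


if __name__ == "__main__":
    fused = mix(encode_text(["a", "cat"]), encode_image([[0.0, 1.0], [1.0, 0.0]]))
    weights = [[0.1] * len(fused), [-0.1] * len(fused)]  # hypothetical "trained" weights
    print(decode(fused, weights, ["cat", "dog"]))
```

In a real system, each stand-in encoder would be a trained network and the weights would be learned end to end, but the data flow (per-modality features, a joint representation, then a prediction) is the same.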

A quick note on multimodal foundation models

Multimodal foundation models are a significant evolution in AI technology, building on the concept of foundation models introduced in Stanford’s 2021 report “On the Opportunities and Risks of Foundation Models.” These models are designed to handle and integrate multiple forms of data, such as text, images, and audio, enabling them to perform a variety of tasks across different domains.

These models inherit the properties of standard foundation models, including the capacity for broad adaptation across numerous downstream tasks. The power of these models comes from their ability to perform transfer learning at scale, which has been a key driver in their development and success.

Capabilities and functionality:

- Visual understanding models: These models are trained to understand and interpret visual data comprehensively, employing techniques like label supervision, language supervision, and self-supervision. They excel in tasks ranging from image classification to more complex challenges like segmentation and object detection.

- Visual generation models: Leveraging large-scale datasets, these models use advanced generative techniques to create high-fidelity visual content from textual descriptions. Innovations in this area include improving the alignment between generated visuals and human intent and enhancing the flexibility and precision of generated content.

- General-purpose interface: The development of general-purpose multimodal models aims to unify various specific-task models to handle both visual understanding and generation tasks more seamlessly. This approach often involves integrating capabilities from language models to enhance the multimodal system’s versatility and interactivity.

Multimodal foundation models draw on diverse AI disciplines, bringing together advances from natural language processing, computer vision, and other fields to create more capable, generalist AI systems. As a result, they are pivotal in pushing the boundaries of what AI can achieve and are likely to be fundamental to future AI applications.

Multimodal vs. Unimodal AI models

The multimodal and unimodal models represent two different approaches to developing artificial intelligence systems. While the unimodal model focuses on training systems to perform a single task using a single data source, the multimodal model seeks to integrate multiple data sources to analyze a given problem comprehensively. Here is a detailed comparison of the two approaches:

- Scope of data: Unimodal AI systems are designed to process a single data type, such as images, text, or audio. In contrast, multimodal AI systems are designed to integrate multiple data sources, including images, text, audio, and video.

- Complexity: Unimodal AI systems are generally less complex than multimodal AI systems since they only need to process one type of data. On the other hand, multimodal AI systems require a more complex architecture to integrate and analyze multiple data sources simultaneously.

- Context: Since unimodal AI systems focus on processing a single type of data, they lack the context and supporting information that can be crucial in making accurate predictions. Multimodal AI systems integrate data from multiple sources and can provide more context and supporting information, leading to more accurate predictions.

- Performance: While unimodal AI systems can perform well on tasks related to their specific domain, they may struggle when dealing with tasks requiring a broader context understanding. Multimodal AI systems integrate multiple data sources and can offer more comprehensive and nuanced analysis, leading to more accurate predictions.

- Data requirements: Unimodal AI systems need large amounts of data from their single modality to be trained effectively. Multimodal AI systems can, in some cases, learn more from a given amount of data because complementary modalities supply additional signal, resulting in a more robust and adaptable system, although they require paired, well-aligned data across modalities, which is often harder to collect.

- Technical complexity: Multimodal AI systems require a more complex architecture to integrate and analyze multiple sources of data simultaneously. This added complexity requires more technical expertise and resources to develop and maintain than unimodal AI systems.

Here is a comparison table between multimodal and unimodal AI:

| Metric | Multimodal AI | Unimodal AI |
|---|---|---|
| Applications | Well-suited for tasks that require an understanding of multiple types of input, such as image captioning and video captioning | Well-suited for tasks that involve a single type of input, such as sentiment analysis and speech recognition |
| Computational Resources | Typically requires more computational resources due to the increased complexity of processing multiple modalities | Generally requires less computational resources due to the simpler processing of a single modality |
| Data Input | Uses multiple types of data input (e.g. text, images, audio) | Uses a single type of data input (e.g. text only, images only) |
| Examples of AI Models | CLIP, DALL-E, METER, ALIGN, SwinBERT | BERT, ResNet, GPT-3, YOLO |
| Information Processing | Processes multiple modalities simultaneously, allowing for a richer understanding and improved accuracy | Processes a single modality, limiting the depth and accuracy of understanding |
| Natural Interaction | Enables natural interaction through multiple modes of communication, such as voice commands and gestures | Limits interaction to a single mode, such as text input or button clicks |

Key components of multimodal AI

Multimodal AI systems integrate and process various types of data through three primary components:

- Input module: Acting as the AI’s sensory organs, this module captures and preprocesses diverse data forms, such as textual content and visual inputs, ensuring they are ready for deeper analysis.

- Fusion module: Central to the AI’s operation, this module synthesizes information from multiple sources. It employs advanced algorithms to emphasize significant elements, integrating them into a unified representation that captures the essence of the combined data.

- Output module: Functioning as the AI’s communicator, this module articulates the conclusions drawn from the processed information. It delivers the AI’s final output, presenting insights or responses based on the synthesized data.

These components work in concert to enable AI systems to understand and interact with the world in a more human-like manner, leveraging diverse data types to enhance decision-making processes.

How does the multimodal model work?

A typical multimodal architecture has three parts: unimodal encoders, a fusion network, and a classifier. First, each unimodal encoder extracts features from the input of its own modality (for example, a CNN for images and a transformer for text). Once the unimodal encoders have processed the input data, the fusion network combines the features they extracted from the different modalities into a single representation. This step is critical to the success of these models, and various fusion techniques, such as concatenation, attention mechanisms, and cross-modal interactions, have been proposed for it.

Finally, the classifier makes predictions based on the fused data: it maps the fused representation to a specific output category or otherwise produces a prediction from the input. The classifier is trained on the specific task and is responsible for making the final decision.

One benefit of multimodal architectures is their modularity, which allows for flexibility in combining different modalities and adapting to new tasks and inputs. By combining information from multiple modalities, a multimodal model can offer more dynamic predictions and better performance than unimodal AI systems. For instance, a model that processes both audio and visual data (such as the speaker’s lip movements) can understand speech better than an audio-only model, especially in noisy environments.

Now let’s discuss how a multimodal AI model and architecture work for different types of inputs with real-life examples:

Image description generation and text-to-image generation

OpenAI’s CLIP, DALL-E, and GLIDE are models that connect images and text. CLIP learns to judge how well an image and a piece of text match, while DALL-E and GLIDE generate new images from text descriptions.

CLIP uses separate image and text encoders, trained jointly on a large dataset of image-caption pairs, to predict which images in a dataset are associated with which descriptions. Notably, CLIP contains multimodal neurons that respond to the same concept whether it appears as an image or as text, indicating a fused multimodal representation.

DALL-E, on the other hand, is a 12-billion-parameter version of GPT-3 that generates a series of candidate images to match the input text and then ranks them using CLIP. The generated images are remarkably accurate and detailed, pushing the limits of what was once thought possible with text-to-image generation.
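The matching that CLIP performs, and that DALL-E relies on to rank its candidates, comes down to comparing embeddings in a shared space. The sketch below assumes the image and text embeddings have already been produced by the two encoders (the vectors in the example are made up), scores pairs by cosine similarity, and turns the scores into probabilities with a temperature-scaled softmax. The temperature value and the function names are illustrative assumptions, not CLIP’s actual API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def match_captions(image_emb: list[float], caption_embs: list[list[float]],
                   temperature: float = 0.01) -> list[float]:
    """Probability that each caption describes the image (CLIP-style matching)."""
    logits = [cosine_similarity(image_emb, c) / temperature for c in caption_embs]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank_candidates(text_emb: list[float], candidate_image_embs: list[list[float]]) -> list[int]:
    """Indices of candidate images, best match to the prompt first (reranking)."""
    scores = [cosine_similarity(text_emb, img) for img in candidate_image_embs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    captions = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # made-up embeddings
    print(match_captions([0.9, 0.1, 0.0], captions))
    print(rank_candidates([0.0, 1.0, 0.0], [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.0]]))
```

The same similarity function serves both directions: picking the best caption for an image, or picking the best generated image for a prompt.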

GLIDE, a later OpenAI model, replaces DALL-E’s autoregressive image generation with a diffusion model guided by the text prompt (its authors compared CLIP guidance with classifier-free guidance), producing more photorealistic images and enabling text-driven image editing.

These models showcase the potential of multimodal AI for connecting images with descriptions and generating high-quality, coherent images from text. They have set a new standard for image-text matching and text-to-image generation, and it will be exciting to see what further advancements are made in this field.

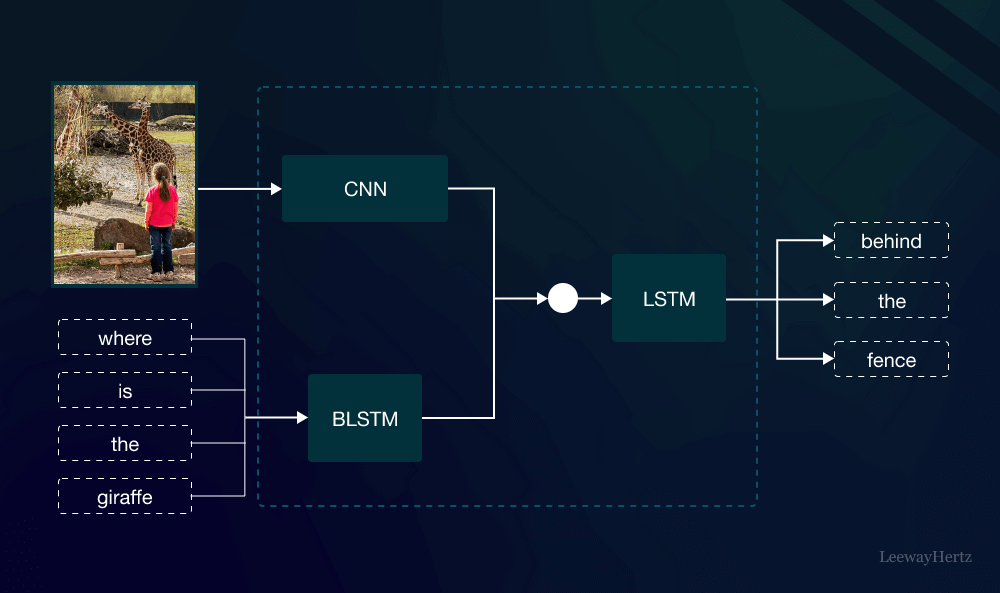

Visual question answering

Visual question answering is a challenging task that requires a model to correctly answer a question about an image. Microsoft Research has developed some of the top-performing approaches to this task. One such approach is METER, a general framework for training high-performing end-to-end vision-language transformers. The framework supports a variety of sub-architectures for the vision encoder, text encoder, multimodal fusion, and decoder modules.

Another approach is the Unified Vision-Language Pretrained Model (VLMo), which uses a modular transformer network to jointly learn a dual encoder and a fusion encoder. The network consists of blocks that combine modality-specific experts with a shared self-attention layer, providing significant flexibility for fine-tuning.
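The idea of a shared self-attention layer followed by modality-specific experts can be sketched in a few lines. This is a heavily simplified, hypothetical illustration, not VLMo’s implementation: it uses a single attention head with identity query/key/value projections, and the "experts" (`make_expert`, `EXPERTS`) are toy functions that scale and apply ReLU instead of learned feed-forward networks.

```python
import math


def self_attention(tokens: list[list[float]]) -> list[list[float]]:
    """Single-head self-attention shared by ALL tokens, whatever their modality.

    Simplification: queries, keys and values are the token vectors themselves
    (identity projections instead of learned weight matrices).
    """
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return outputs


def make_expert(scale: float):
    """Hypothetical toy expert: elementwise ReLU(scale * x) in place of a real FFN."""
    return lambda x: [max(0.0, scale * v) for v in x]


EXPERTS = {"vision": make_expert(2.0), "language": make_expert(0.5)}


def mome_block(tokens: list[list[float]], modalities: list[str]) -> list[list[float]]:
    """Shared attention, residual connection, then route each token to its modality's expert."""
    attended = self_attention(tokens)
    outputs = []
    for x, a, modality in zip(tokens, attended, modalities):
        hidden = [xi + ai for xi, ai in zip(x, a)]  # residual connection
        outputs.append(EXPERTS[modality](hidden))
    return outputs


if __name__ == "__main__":
    # One image-patch token and one word token in the same sequence.
    print(mome_block([[1.0, 0.5], [0.2, 1.0]], ["vision", "language"]))
```

Because attention is shared, image and text tokens can exchange information in every block, while the routing step lets each modality keep its own specialized processing; the same stack can therefore be used with one modality (dual-encoder style) or both (fusion style).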

These models demonstrate the power of multimodal AI in combining vision and language to answer complex questions, and continued research and development is likely to expand their capabilities further.

Text-to-image and image-to-text search

Multimodal learning has important applications in web search, as demonstrated by the WebQA dataset created by experts from Microsoft and Carnegie Mellon University. In this task, a model must identify image- and text-based sources that can help answer a query. Most questions require multiple sources to arrive at the correct answer, and the model must then reason over these sources to produce a natural language answer to the query.

Google has also tackled the challenge of multimodal search with its A Large-scale ImaGe and Noisy-Text Embedding model (ALIGN). ALIGN leverages the easily accessible but noisy alt-text data attached to internet images to train separate visual (EfficientNet-L2) and text (BERT-Large) encoders. The encoders are trained with a contrastive loss that aligns their outputs in a shared embedding space, resulting in multimodal representations that can power cross-modal search without further fine-tuning.
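Contrastive training of the kind used by ALIGN can be illustrated with a symmetric InfoNCE-style loss over a batch: matching image-text pairs sit on the diagonal of the similarity matrix, and the loss rewards ranking each true partner above the other items in the batch. This sketch works on precomputed embeddings and uses a fixed temperature for simplicity (in practice the temperature is often learned); a real implementation would use a tensor library with automatic differentiation.

```python
import math


def l2_normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: list[float], target: int) -> float:
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_embs: list[list[float]], text_embs: list[list[float]],
                     temperature: float = 0.05) -> float:
    """Symmetric InfoNCE loss; image_embs[i] and text_embs[i] are a matching pair."""
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = cosine similarity of image i and text j, scaled by 1/temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
              for img in images]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


if __name__ == "__main__":
    imgs = [[1.0, 0.0], [0.0, 1.0]]
    print("aligned:", contrastive_loss(imgs, [[1.0, 0.0], [0.0, 1.0]]))
    print("shuffled:", contrastive_loss(imgs, [[0.0, 1.0], [1.0, 0.0]]))
```

The loss is near zero when every image is closest to its own caption and large when the pairs are mismatched, which is the pressure that pulls matching images and alt-text together in the shared space.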

Video-language modeling

AI systems have historically struggled with video-based tasks because of how resource-intensive they are. However, recent research on video-related multimodal tasks is making significant progress. Microsoft’s Project Florence-VL is a major effort in this field, and its introduction of ClipBERT in mid-2021 marked a major breakthrough. ClipBERT combines CNN and transformer models that operate on sparsely sampled frames and are optimized end to end to solve popular video-language tasks. Successors to ClipBERT, including VIOLET and SwinBERT, use Masked Visual-token Modeling and sparse attention, respectively, to achieve state-of-the-art results in video question answering, retrieval, and captioning. Despite their differences, all of these models share a transformer-based architecture combined with parallel learning modules that extract information from different modalities and unify it into a single multimodal representation.
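ClipBERT’s sparse-sampling idea, encoding only a few short clips instead of every frame and then combining the clip-level predictions, can be sketched as follows. The evenly spaced clip positions, the clip length, and mean-pooling of scores are illustrative assumptions; the model that would score each clip is omitted.

```python
def sample_clip_starts(num_frames: int, num_clips: int, clip_len: int) -> list[int]:
    """Evenly spaced start indices for num_clips clips of clip_len consecutive frames."""
    if num_frames < clip_len or num_clips < 1:
        raise ValueError("video too short or no clips requested")
    last_start = num_frames - clip_len
    if num_clips == 1:
        return [last_start // 2]  # a single clip from the middle of the video
    return [round(i * last_start / (num_clips - 1)) for i in range(num_clips)]


def sparse_sample(frames: list, num_clips: int = 2, clip_len: int = 2) -> list[list]:
    """Return a few short clips instead of the full (dense) frame sequence."""
    starts = sample_clip_starts(len(frames), num_clips, clip_len)
    return [frames[s:s + clip_len] for s in starts]


def consensus(clip_scores: list[list[float]]) -> list[float]:
    """Average per-clip class scores into a single video-level prediction."""
    n = len(clip_scores)
    return [sum(scores[c] for scores in clip_scores) / n for c in range(len(clip_scores[0]))]


if __name__ == "__main__":
    frames = list(range(100))  # stand-ins for 100 decoded video frames
    print(sparse_sample(frames, num_clips=4, clip_len=2))
    print(consensus([[0.7, 0.3], [0.5, 0.5], [0.9, 0.1], [0.6, 0.4]]))
```

Only 8 of the 100 frames would be passed through the expensive visual encoder here, which is what makes end-to-end training on video affordable.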

Here is a table comparing some of the popular multimodal models

| Model Name | Modality | Key Features | Applications |
|---|---|---|---|
| CLIP | Vision and Text | Jointly trained image and text encoders, multimodal neurons | Zero-shot image classification, image-text matching, ranking generated images |
| DALL-E | Text and Image | 12B-parameter GPT-3 variant that generates images from text | Text-to-image generation, image manipulation |
| GLIDE | Text and Image | Diffusion model for text-guided image generation | Text-to-image generation, image manipulation |
| METER | Vision and Text | A general framework for vision-language transformers | Visual question answering |
| VLMo | Vision and Text | Modular transformer network with shared self-attention | Visual question answering |
| ALIGN | Vision and Text | Exploits noisy alt-text data to train separate encoders | Multimodal search |
| ClipBERT | Video and Text | Combination of CNN and transformer models, trained end to end | Video-language tasks: question answering, retrieval, captioning |
| VIOLET | Video and Text | Masked Visual-token Modeling | Video question answering, video retrieval |
| SwinBERT | Video and Text | Video Swin Transformer with sparse attention | Video captioning |

Architectures and algorithms used in multimodal model development

Multimodal models advance the capabilities of artificial intelligence, enabling systems to interpret and analyze data from various sources such as text, images, audio, and more. These models mimic human sensory and cognitive abilities by processing and integrating information from different modalities. Multimodal model development involves sophisticated architectures and algorithms. Here’s an in-depth look at the key components:

Data modalities

Data modalities refer to the various forms or types of data that multimodal machine learning models can process and analyze, each contributing unique insights and enhancing the model’s understanding of the world:

- Text: Utilizes natural language processing (NLP) techniques like tokenization, part-of-speech tagging, and semantic analysis to interpret written or spoken language.

- Images: Analyzed through computer vision (CV) techniques such as image classification, object detection, and scene recognition to extract visual information.

- Audio: Processed using audio analysis techniques, including sound classification and audio event detection, to interpret speech, music, or environmental sounds.

- Video: Combines image sequences with audio, requiring methods that manage both spatial and temporal data, critical for tasks like activity recognition or interactive media.

- Others: Encompasses various other data types like sensor data, which provides environmental context, and time series data, offering insights into trends and patterns. These additional modalities enrich AI systems by adding more layers of data interpretation.

Each modality brings its own processing challenges and advantages, and in multimodal systems, the integration of these diverse data types enables more comprehensive analyses and robust decision-making.

Representation learning across modalities

Representation learning is crucial for transforming raw data into a structured format that AI models can effectively interpret and analyze. This process varies across different data modalities:

- Text: Converts words into vectors that capture semantic meaning, using techniques like word embeddings (Word2Vec, GloVe) or contextual embeddings from advanced models such as BERT or GPT.

- Images: Employs convolutional neural networks (CNNs) to extract meaningful features from raw pixel data, which are essential for tasks such as image recognition and classification.

- Audio: Transforms sound waves into visual representations like spectrograms or extracts features using Mel-frequency cepstral coefficients (MFCCs), facilitating the classification and analysis of various sounds.

- Video: Combines the techniques used in image and audio processing, often integrating recurrent neural networks (RNNs) or three-dimensional (3D) CNNs to capture the dynamic elements over time, crucial for understanding activities or events in videos.

Each modality requires distinct approaches for feature extraction and representation, enabling models to leverage the inherent properties of each type of data for more accurate and effective analysis.
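As an example of the audio step described above, the following sketch turns a raw waveform into a magnitude spectrogram by slicing it into overlapping frames, applying a Hann window, and taking a discrete Fourier transform (DFT) of each frame. It uses a naive O(n²) DFT in pure Python for readability; the frame and hop sizes are arbitrary choices, and computing MFCCs would additionally require a mel filterbank, a logarithm, and a discrete cosine transform, which are not shown.

```python
import math


def hann_window(n: int) -> list[float]:
    """Symmetric Hann window, tapering frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def magnitude_spectrogram(signal: list[float], frame_len: int = 64,
                          hop: int = 32) -> list[list[float]]:
    """Frames x frequency-bin magnitudes via a naive DFT (bins 0..frame_len // 2)."""
    window = hann_window(frame_len)
    spectrogram = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        magnitudes = []
        for k in range(frame_len // 2 + 1):
            re = sum(x * math.cos(2 * math.pi * k * t / frame_len) for t, x in enumerate(frame))
            im = -sum(x * math.sin(2 * math.pi * k * t / frame_len) for t, x in enumerate(frame))
            magnitudes.append(math.hypot(re, im))
        spectrogram.append(magnitudes)
    return spectrogram


if __name__ == "__main__":
    sample_rate = 512  # Hz (illustrative)
    tone = [math.sin(2 * math.pi * 64 * t / sample_rate) for t in range(sample_rate)]
    spec = magnitude_spectrogram(tone)
    # Each bin spans sample_rate / frame_len = 8 Hz, so a 64 Hz tone peaks in bin 8.
    print(len(spec), "frames; peak bin:", spec[0].index(max(spec[0])))
```

The resulting 2D grid (time by frequency) can then be fed to the same kinds of convolutional encoders used for images, which is one reason spectrograms are such a common audio representation.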

Encoders in multimodal models

Encoders are pivotal in translating raw data from different modalities into a compatible feature space. Each encoder is specialized:

- Image encoders: Convolutional Neural Networks (CNNs) are a common choice for processing image data. They excel in capturing spatial hierarchies and patterns within images.

- Text encoders: Transformer-based models like BERT or GPT have revolutionized text encoding. They capture contextual information and nuances in language, far surpassing previous models.

- Audio encoders: Models like WaveNet and DeepSpeech translate raw audio into meaningful features, capturing rhythm, tone, and content.
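The core operation behind the CNN image encoders mentioned above is a small filter slid across the image. The sketch below applies one hand-picked 2×2 vertical-edge filter (a trained CNN learns many filters), followed by ReLU and 2×2 max pooling. Like most deep learning libraries, it computes cross-correlation, that is, convolution without flipping the kernel.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """'Valid' 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    return [
        [sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
         for c in range(w - kw + 1)]
        for r in range(h - kh + 1)
    ]


def relu(feature_map: list[list[float]]) -> list[list[float]]:
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map: list[list[float]]) -> list[list[float]]:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(feature_map[r][c], feature_map[r][c + 1],
             feature_map[r + 1][c], feature_map[r + 1][c + 1])
         for c in range(0, len(feature_map[0]) - 1, 2)]
        for r in range(0, len(feature_map) - 1, 2)
    ]


if __name__ == "__main__":
    # Dark left half, bright right half: a vertical edge between columns 1 and 2.
    image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]  # responds to dark-to-bright transitions
    feature_map = conv2d(image, edge_kernel)
    print(feature_map)
    print(max_pool_2x2(relu(feature_map)))
```

Stacking many such filter, nonlinearity, and pooling stages is what lets a CNN build up from edges to textures to object parts, the "spatial hierarchies" referred to above.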

Attention mechanisms in multimodal models

Attention mechanisms allow models to dynamically focus on relevant parts of the data:

- Visual attention: In tasks like image captioning, the model learns to concentrate on specific image regions relevant to the generated text.

- Sequential attention: In NLP, attention helps models focus on relevant words or phrases in a sentence or across sentences, essential for tasks like translation or summarization.
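Visual attention of the kind described above is commonly implemented as scaled dot-product attention: a query derived from the text (for example, the caption word being generated) is compared with a key for each image region, and the softmax of those scores decides how much each region’s value contributes. In this sketch the query, keys, and values are given directly as made-up vectors; in a real model they come from learned projections.

```python
import math


def cross_attention(query: list[float], keys: list[list[float]],
                    values: list[list[float]]) -> tuple[list[float], list[float]]:
    """Scaled dot-product attention of one text query over image regions.

    Returns (attention weights over regions, attended context vector).
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, context


if __name__ == "__main__":
    word_query = [1.0, 0.0]                          # e.g. the word "dog"
    region_keys = [[0.0, 1.0], [2.0, 0.0], [0.5, 0.5]]  # sky, dog, grass (made up)
    region_values = [[1.0, 1.0], [5.0, 5.0], [2.0, 2.0]]
    weights, context = cross_attention(word_query, region_keys, region_values)
    print(weights, context)
```

The region whose key best matches the query receives the largest weight, so the context vector passed on to the caption decoder is dominated by the part of the image the current word is about.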

Fusion in multimodal models

Fusion strategies integrate information from various encoders, ensuring that the multimodal model capitalizes on the strengths of each modality:

- Early fusion: Integrates raw data or initial features from each modality before passing them through the model, promoting early interaction between modalities.

- Late fusion: Combines the outputs of individual modality-specific models, allowing each modality to be processed independently before integration.

- Hybrid fusion: Combines aspects of both early and late fusion, aiming to balance the integration of modalities at different stages of processing.

- Cross-modal fusion: Goes beyond simple combination, often employing attention or other mechanisms to dynamically relate features from different modalities, enhancing the model’s ability to capture inter-modal relationships.
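The difference between early and late fusion is easiest to see side by side. In this sketch, assuming a binary task and precomputed text and image feature vectors, early fusion concatenates the features and applies one joint logistic classifier, while late fusion runs a separate logistic classifier per modality and blends the two probabilities with a mixing weight `alpha`. All weights are hypothetical placeholders for trained parameters.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def linear(weights: list[float], bias: float, x: list[float]) -> float:
    return sum(w * v for w, v in zip(weights, x)) + bias


def early_fusion_predict(text_feat: list[float], image_feat: list[float],
                         weights: list[float], bias: float) -> float:
    """Early fusion: concatenate features first, then apply ONE joint classifier."""
    fused = text_feat + image_feat  # list concatenation
    return sigmoid(linear(weights, bias, fused))


def late_fusion_predict(text_feat: list[float], image_feat: list[float],
                        text_weights: list[float], text_bias: float,
                        image_weights: list[float], image_bias: float,
                        alpha: float = 0.5) -> float:
    """Late fusion: separate per-modality classifiers, then blend their outputs."""
    p_text = sigmoid(linear(text_weights, text_bias, text_feat))
    p_image = sigmoid(linear(image_weights, image_bias, image_feat))
    return alpha * p_text + (1.0 - alpha) * p_image


if __name__ == "__main__":
    text, image = [0.8, -0.2], [0.1, 0.9]
    print("early:", early_fusion_predict(text, image, [1.0, 0.5, -0.3, 0.7], 0.0))
    print("late:", late_fusion_predict(text, image, [1.0, 0.5], 0.0, [-0.3, 0.7], 0.0))
```

Early fusion lets the classifier learn interactions between text and image features; late fusion keeps the modalities independent until the end, which makes it easier to train each part separately or to cope with a missing modality. A hybrid scheme could feed both kinds of output into a final combiner, and cross-modal fusion would typically replace the plain concatenation with attention between modalities.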

Challenges and considerations

Effective multimodal model development involves addressing several challenges:

- Alignment and synchronization: Ensuring that the data from different modalities are properly aligned in time and context is crucial.

- Dimensionality and scale disparity: Different modalities may have vastly different feature dimensions and scales, requiring careful normalization and calibration.

- Scalability: As multimodal AI systems incorporate more modalities, their scalability becomes a critical challenge. Scaling up involves not just handling larger volumes of data but also maintaining performance and accuracy across all integrated modalities. This can be particularly challenging when new data types or sources are added to the system, requiring continuous adjustments and optimizations to the model’s architecture.

- Contextual and semantic discrepancies: Understanding and bridging the semantic gap between modalities is essential for coherent and accurate interpretation.

- Data volume and quality: Managing the vast amounts of varied data required by multimodal AI systems poses significant challenges in terms of storage, redundancy, and processing costs. Ensuring the quality of such data is also critical for effective AI performance.

- Learning nuance: Teaching AI to understand nuances in human communication, such as interpreting sarcasm or emotional subtleties from phrases like “Wonderful,” remains a complex issue. Additional context from speech inflections or facial cues is often necessary to generate accurate responses.

- Data alignment: Aligning data from different sources accurately in terms of time and context is essential for coherent AI analysis, yet challenging. Misalignments can lead to incorrect model interpretations and decisions, complicating the development and reliability of AI applications.

- AI hallucinations: Multimodal systems are prone to ‘hallucinations’, that is, generating false or misleading outputs. These errors are more difficult to detect and rectify when they involve complex data types like images or videos, potentially leading to more severe consequences.

- Limited datasets: The availability of comprehensive and unbiased datasets is often limited, making it difficult and expensive to obtain the necessary data for training robust AI models. Issues of data completeness, integrity, and potential biases further complicate model training.

- Missing data: Dependence on multiple data sources means that missing input from any source, such as malfunctioning audio inputs, can lead to errors or misinterpretations.

- Decision-making complexity: Understanding and interpreting the decision-making processes of AI systems is difficult. Neural networks, once trained, can be opaque, making it challenging to identify and correct biases or errors. This complexity can render AI systems unreliable or unpredictable, potentially leading to undesirable outcomes.
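Two of the challenges above, alignment/synchronization and scale disparity, have simple baseline treatments. The sketch below (1) aligns a faster audio feature stream to video frame timestamps by nearest-neighbor lookup and (2) standardizes each feature dimension to zero mean and unit variance before fusion. Nearest-timestamp matching and z-score normalization are illustrative choices rather than the only options, and the timestamps and features in the example are made up.

```python
import bisect
import math


def align_to_timestamps(target_times: list[float], source_times: list[float],
                        source_feats: list[list[float]]) -> list[list[float]]:
    """For each target timestamp, pick the source feature nearest in time.

    source_times must be sorted ascending; ties go to the earlier source sample.
    """
    aligned = []
    for t in target_times:
        i = bisect.bisect_left(source_times, t)
        if i == 0:
            j = 0
        elif i == len(source_times):
            j = len(source_times) - 1
        else:
            j = i if source_times[i] - t < t - source_times[i - 1] else i - 1
        aligned.append(source_feats[j])
    return aligned


def zscore(vectors: list[list[float]]) -> list[list[float]]:
    """Standardize each dimension to zero mean and unit variance across the batch."""
    n, dims = len(vectors), len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    # A constant dimension has std 0; fall back to 1.0 to avoid dividing by zero.
    stds = [math.sqrt(sum((v[d] - means[d]) ** 2 for v in vectors) / n) or 1.0
            for d in range(dims)]
    return [[(v[d] - means[d]) / stds[d] for d in range(dims)] for v in vectors]


if __name__ == "__main__":
    audio_times = [0.0, 0.5, 1.0, 1.5, 2.0]            # seconds (made up)
    audio_feats = [[0.0], [1.0], [2.0], [3.0], [4.0]]
    video_times = [0.1, 0.9, 1.74, 3.0]
    print(align_to_timestamps(video_times, audio_times, audio_feats))
    print(zscore([[1.0, 10.0], [3.0, 10.0]]))
```

After alignment, each video frame has a matching audio feature, and after normalization neither modality dominates a fused representation merely because its raw values are larger.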

Recent trends and advancements in multimodal model development

Recent advancements in multimodal model development have focused on improving the efficiency and accuracy of these models:

- Transformer models: Models like ViLBERT or VisualBERT extend the transformer architecture to multimodal data, enabling more sophisticated understanding and generation tasks.

- End-to-end learning: Efforts to develop models that learn directly from raw multimodal data, reducing the reliance on pre-trained unimodal encoders.

- Explainability and bias mitigation: Addressing the interpretability of these complex models and ensuring they do not perpetuate or amplify biases present in multimodal data.

- Foundation models: Recent advances in foundation models have significantly enhanced capabilities in video generation, text summarization, information extraction, and text-image captioning while simplifying interactions through chat or prompts. Future iterations are poised to harness a broader spectrum of multimodal data, notably from untapped resources like YouTube, which offers a vast repository of freely accessible, paired multimodal data. Additionally, adopting continual learning mechanisms will enable these models to dynamically update and adapt in response to new user inputs and online information, ensuring they remain current and effective.

In conclusion, multimodal model development represents a significant leap in AI’s ability to understand and interpret the world in a human-like manner. By leveraging sophisticated encoders, attention mechanisms, and fusion strategies, these models are unlocking new possibilities across various domains, from autonomous vehicles to enhanced communication interfaces. As research progresses, the integration, efficiency, and interpretability of multimodal models continue to advance, paving the way for even more innovative applications.

Benefits of a multimodal model

Contextual understanding

One of the most significant benefits of multimodal models is their ability to achieve contextual understanding, which is the ability of a system to comprehend the meaning of a sentence or phrase based on the surrounding words and concepts. In natural language processing (NLP), understanding the context of a sentence is essential for accurate language comprehension and generating appropriate responses. In the case of multimodal AI, the model can combine visual and linguistic information to gain a more comprehensive understanding of the context.

By integrating multiple modalities, multimodal models can consider both the visual and textual cues in a given context. For example, in image captioning, the model must interpret the visual information in the image and express it in language to produce an accurate and meaningful description. In video captioning, the model needs to understand not just the visual information but also the temporal relationships between events, sounds, and dialogue.

Another example of the benefits of contextual understanding in multimodal AI is in the field of natural language dialogue systems. Multimodal models can use visual and linguistic cues to generate more human-like conversation responses. For instance, a chatbot that understands the visual context of the conversation can generate more appropriate responses that consider not just the text of the conversation but also the images or videos being shared.

Natural interaction

Another key benefit of multimodal models is their ability to facilitate natural interaction between humans and machines. Traditional AI systems have been limited in interacting with humans naturally since they typically rely on a single mode of input, such as text or speech. However, multimodal models can combine multiple input modes, such as speech, text, and visual cues, to comprehensively understand a user’s intentions and needs.

For example, a multimodal AI system in a virtual assistant could use speech recognition to understand a user’s voice commands and also incorporate information from the user’s facial expressions and gestures to gauge their level of interest or engagement. By taking these additional cues into account, the system can provide a more tailored and engaging experience for the user.

Multimodal models can also make language-based interaction more natural, allowing users to communicate with machines in a more conversational manner. For example, a chatbot could use natural language understanding to interpret a user’s text message and incorporate visual cues from emojis or images to better understand the user’s tone and emotions, resulting in a more nuanced and effective response.

Enhanced user experience

Multimodal systems enhance user interactions by recognizing and integrating different forms of communication, such as voice, text, and gestures. This capability makes virtual assistants and other AI interfaces more intuitive and responsive, significantly improving user engagement.

Improved accuracy

By integrating modalities such as text, speech, images, and video, multimodal models can capture a more comprehensive and nuanced understanding of the input data, which often yields more accurate predictions and better performance across a wide range of tasks.

Multiple modalities allow multimodal models to generate more precise and descriptive captions in tasks like image captioning. They can also enhance natural language processing tasks such as sentiment analysis by incorporating speech or facial expressions to gain more accurate insights into the speaker’s emotional state.

Additionally, multimodal models tend to be more resilient to incomplete or noisy data, because they can fill gaps or correct errors in one modality using information from the others. For instance, a model that also reads lip movements can improve speech recognition accuracy in noisy environments, where the audio alone may be unclear.

Improved capabilities

Multimodal models can also broaden the overall capabilities of AI systems. By drawing on data from multiple modalities, including text, images, audio, and video, they build a richer understanding of the world and of the context in which the system operates, enabling it to perform a broader range of tasks with greater accuracy and effectiveness.

For instance, by combining speech and facial recognition, a multimodal model could create a more robust system for identifying individuals. Analyzing visual and audio cues together can also help distinguish between individuals with similar appearances or voices, while contextual information such as the environment or behavior provides a better understanding of the situation, leading to more informed decisions.

Multimodal models can also enable more natural and intuitive interactions with technology, making it easier for users to interact with AI systems. By combining modalities such as voice and gesture recognition, a system can understand and respond to more complex commands and queries, leading to more efficient and effective use of technology and improved user satisfaction.

Robustness

By utilizing multiple sources of information, multimodal AI systems are less susceptible to errors or noise in individual data streams. This capability allows them to remain effective even when some data sources are compromised or uncertain, providing reliable predictions and classifications.
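One simple way to get this kind of robustness is late (decision-level) fusion: each modality has its own model, and their class probabilities are combined with a weighted average over whichever modalities are actually available. The sketch below assumes that setup; the modality names, weights, and probabilities are hypothetical, and real systems may use learned fusion instead of fixed weights.

```python
from typing import Dict, List, Optional


def late_fusion(
    modality_probs: Dict[str, Optional[List[float]]],
    weights: Dict[str, float],
) -> List[float]:
    """Weighted average of per-modality class probabilities.

    Modalities whose prediction is None (missing, or rejected as too noisy)
    are skipped, and the remaining weights are renormalized to sum to 1.
    """
    available = {m: p for m, p in modality_probs.items() if p is not None}
    if not available:
        raise ValueError("at least one modality must be available")
    total_weight = sum(weights[m] for m in available)
    n_classes = len(next(iter(available.values())))
    fused = [0.0] * n_classes
    for modality, probs in available.items():
        share = weights[modality] / total_weight  # renormalized weight
        for k, p in enumerate(probs):
            fused[k] += share * p
    return fused


# Hypothetical example: the audio stream is unusable, so video and text decide.
predictions = {
    "audio": None,
    "video": [0.2, 0.8],  # P(class 0), P(class 1) from a video-only model
    "text": [0.4, 0.6],   # P(class 0), P(class 1) from a text-only model
}
weights = {"audio": 0.5, "video": 0.3, "text": 0.2}
print(late_fusion(predictions, weights))  # approximately [0.28, 0.72]
```

Because the weights are renormalized over the available modalities, losing one stream degrades the prediction gracefully instead of breaking it.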

Multimodal AI use cases

Forward-thinking companies and organizations have taken notice of multimodal models’ incredible capabilities and are incorporating them into their digital transformation agendas. Here are some of the use cases of multimodal AI:

Automotive industry

The automotive industry has been an early adopter of multimodal AI, using it to enhance safety, convenience, and the overall driving experience. In recent years, automakers have made significant strides in integrating multimodal AI into driver assistance systems, HMI (human-machine interface) assistants, and driver monitoring systems. Driver assistance in modern cars is no longer limited to basic cruise control and lane detection; multimodal AI has enabled more sophisticated features such as automatic emergency braking, adaptive cruise control, and blind spot monitoring. These systems combine modalities such as visual recognition, radar, and ultrasonic sensors to detect and respond to different driving situations.

Multimodal AI has also improved the HMI of modern vehicles. With voice and gesture recognition, drivers can interact with their vehicles more intuitively and naturally. For example, drivers can use voice commands to adjust the temperature, change the music, or make a phone call without taking their hands off the steering wheel.

Furthermore, driver monitoring systems powered by multimodal AI can detect fatigue, drowsiness, and inattention by combining modalities such as facial analysis, eye tracking, and steering wheel movements. If the system determines that a driver is inattentive or drowsy, it can issue a warning or even take control of the vehicle to avoid an accident.

The possibilities for multimodal interaction with vehicles are vast, and communicating with a car through voice, images, or actions may soon be the norm. With multimodal AI, we can look forward to a future where our vehicles are more than just machines that take us from point A to point B. They will become trusted companions with whom we can interact naturally and intuitively, making driving safer, more comfortable, and more enjoyable.

Healthcare and pharma

Multimodal AI enables faster and more accurate diagnoses, personalized treatment plans, and better patient outcomes in the healthcare and pharmaceutical industries. With the ability to analyze multiple data modalities, including image data, symptoms, background, and patient histories, multimodal AI can help healthcare professionals make informed decisions quickly and efficiently.

In healthcare, multimodal AI can help physicians diagnose complex diseases by analyzing medical images such as MRI, CT scans, and X-rays. These modalities, when combined with clinical data and patient histories, provide a more comprehensive understanding of the disease and help physicians make accurate diagnoses. For example, in the case of cancer treatment, multimodal AI can help identify the type of cancer, its stage, and the best course of treatment. Multimodal AI can also help improve patient outcomes by providing personalized treatment plans based on a patient’s medical history, genetic data, and other health parameters, which help physicians tailor treatments to individual patients, resulting in better outcomes and reduced healthcare costs.

In the pharmaceutical industry, multimodal AI can help accelerate drug development by identifying new drug targets and predicting the efficacy of potential drug candidates. By analyzing large amounts of data from multiple sources, including clinical trials, electronic health records, and genetic data, multimodal AI can identify patterns and relationships that may not be apparent to human researchers, helping pharmaceutical companies to identify promising drug candidates and bring new treatments to market more quickly.

Media and entertainment

Multimodal AI enables personalized content recommendations, targeted advertising, and efficient remarketing in the media and entertainment industry. By analyzing multiple data modalities, including user preferences, viewing history, and behavioral data, multimodal AI can deliver tailored content and advertising experiences to each individual user.

One of the most significant uses of multimodal AI in media and entertainment is recommendation systems. By analyzing user data such as viewing history and preferences, these systems provide personalized recommendations for movies, TV shows, and other content, helping users discover content they might not otherwise have found and increasing engagement and satisfaction.

Multimodal AI is also used to deliver personalized advertising experiences. By analyzing user data, including browsing history, search history, and social media interactions, multimodal AI can create targeted advertising campaigns that are more likely to resonate with individual users. This can lead to higher click-through rates and conversions for advertisers, while providing a more relevant and engaging experience for users.

In addition, multimodal AI is used for efficient remarketing. By analyzing user behavior and preferences, multimodal AI can create personalized messaging and promotions that are more likely to convert users who have abandoned their shopping carts or canceled subscriptions. This can help media and entertainment companies recover lost revenue while providing a better user experience.

Retail

Multimodal AI has enabled the retail industry to personalize customer experiences, improve supply chain management, and enhance product recommendations. By analyzing multiple data modalities, including customer behavior, preferences, and purchase history, multimodal AI can provide retailers with valuable insights that can be used to optimize their operations and drive revenue growth.

One of the most significant uses of multimodal AI in retail is customer profiling. By analyzing customer data from various sources, including online behavior, in-store purchases, and social media interactions, multimodal AI builds a detailed profile of each customer, covering their preferences, purchase history, and shopping habits. This information can be used to create personalized product recommendations, targeted marketing campaigns, and customized promotions that are more likely to resonate with individual customers.

Multimodal AI is also used for supply chain optimization. By analyzing data from production, transportation, and inventory management, it can help retailers streamline their supply chain operations, reducing costs and improving efficiency, which leads to faster delivery times, less waste, and higher customer satisfaction.

In addition, multimodal AI is used for personalized product recommendations. By analyzing customer data, including purchase history, browsing behavior, and preferences, multimodal AI can provide customers with personalized product recommendations, making it easier for them to discover new products and make informed purchase decisions. This can lead to increased customer loyalty and higher revenue for retailers.

Manufacturing

Multimodal AI is increasingly being used in manufacturing to improve efficiency, safety, and quality control. By integrating input and output modes such as voice, vision, and movement, manufacturers can optimize their production processes and deliver better products to their customers.

One of the key applications of multimodal AI in manufacturing is predictive maintenance, in which machine learning algorithms analyze sensor data from machines and equipment to flag potential issues before they occur. Manufacturers can then take proactive measures to prevent downtime and costly repairs, improving equipment reliability, increasing productivity, and reducing maintenance costs.

Multimodal AI can also support quality control by integrating vision and image recognition technologies to identify product defects and anomalies during production. Using computer vision algorithms and machine learning models, manufacturers can detect defects automatically and make real-time adjustments to their production processes to minimize waste and improve product quality.

In addition, multimodal AI can optimize supply chain management by integrating data from sources such as transportation systems, logistics networks, and inventory management systems, helping manufacturers improve production planning and scheduling, reduce lead times, and increase overall supply chain efficiency.

Security and surveillance

Multimodal AI is transforming security and surveillance by integrating and analyzing diverse data types to improve monitoring and response.

- Threat detection: These systems improve accuracy by analyzing video feeds, audio alerts, and sensor data simultaneously. Cross-referencing information from different modalities helps identify subtle anomalies that unimodal systems might miss. For instance, visual signs of suspicious behavior combined with matching audio cues can trigger an alert that prompts immediate security action.

- Incident analysis: After an event, multimodal AI can reconstruct the incident by synthesizing data from multiple sources, offering a comprehensive view that aids rapid decision-making and future prevention strategies. For example, after a security breach, AI systems can analyze video, audio, and data from access control systems to build a detailed timeline and context of the events, significantly strengthening post-incident evaluations.

This technology significantly advances threat detection and incident analysis, improving situational awareness and security outcomes.

How to build a multimodal model?

Take an image, add some text, and you have a meme. In this example, we describe the basic steps to develop and train a multimodal model that detects a certain type of meme. The steps are as follows.

Step 1: Import libraries

Begin by importing the essential data science libraries for loading and manipulating data, along with a deep learning framework such as PyTorch, which supports data exploration as well as model development. Memes combine vision and language, so it helps to use torchvision, part of the PyTorch ecosystem, for computer vision tasks and fastText, a separate library from Facebook AI Research, for language data processing. These tools simplify model building by providing access to popular datasets, pre-trained model architectures and word vectors, and data transformation capabilities.

Step 2: Loading and exploring the data

Begin this step by conducting a preliminary analysis of your dataset. Assess the distribution of image sizes, image quality, and text length to understand the variability within your data. This exploration will help you identify any preprocessing needs to standardize the dataset before further processing.
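The exploration can start as a few summary statistics. This sketch assumes each record already carries the image's pixel width and height (for example, read with PIL's `Image.open(path).size`), its overlaid text, and a label; the records themselves are hypothetical. The label count is included because class imbalance is one of the things this step should surface.

```python
from collections import Counter
from statistics import median


def describe(values):
    """Min / median / max summary of a list of numbers."""
    return {"min": min(values), "median": median(values), "max": max(values)}


def explore(records):
    """Summarize image sizes, text lengths (in words), and label balance."""
    return {
        "width": describe([r["width"] for r in records]),
        "height": describe([r["height"] for r in records]),
        "text_words": describe([len(r["text"].split()) for r in records]),
        "labels": Counter(r["label"] for r in records),
    }


# Hypothetical records standing in for a real meme dataset.
records = [
    {"width": 800, "height": 600, "text": "when the code finally compiles", "label": 0},
    {"width": 265, "height": 400, "text": "nobody:", "label": 1},
    {"width": 1024, "height": 768, "text": "me at 3 am", "label": 0},
]
summary = explore(records)
print(summary["width"])   # {'min': 265, 'median': 800, 'max': 1024}
print(summary["labels"])  # Counter({0: 2, 1: 1})
```

Wide ranges in width and height indicate that resizing is needed; skewed label counts indicate that balancing is needed before training.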

Loading the image data requires transformations that standardize and preprocess the inputs for model training. Use torchvision’s transforms to resize images and convert them into tensors, which gives every input a uniform size and makes the data easier to manipulate and visualize. This step prepares the dataset for efficient learning and better accuracy during training.

After standard transformations, consider implementing image augmentation techniques such as rotations and flips to improve model generalization. For text, explore data augmentation strategies like synonym replacement or random insertion to enhance the robustness of your text processing.
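The two text strategies mentioned here can be sketched in plain Python. The synonym table below is a hypothetical toy; a real pipeline might draw synonyms from a thesaurus such as WordNet. Passing a seeded `random.Random` makes the augmentations reproducible.

```python
import random

# Hypothetical toy synonym table (illustration only).
SYNONYMS = {
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
    "funny": ["hilarious", "amusing"],
}


def synonym_replacement(text, n, rng):
    """Replace up to n words that have known synonyms with a random synonym."""
    words = text.split()
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


def random_insertion(text, n, rng):
    """Insert n synonyms of words from the text at random positions."""
    words = text.split()
    known = [w for w in words if w.lower() in SYNONYMS]
    if not known:
        return text  # nothing to draw synonyms from
    for _ in range(n):
        synonym = rng.choice(SYNONYMS[rng.choice(known).lower()])
        words.insert(rng.randint(0, len(words)), synonym)
    return " ".join(words)


rng = random.Random(42)
caption = "a big happy dog"
print(synonym_replacement(caption, n=1, rng=rng))
print(random_insertion(caption, n=2, rng=rng))
```

For memes, text augmentation should be applied cautiously: replacing a word can change the meaning that the image and text convey together.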

Step 3: Building a multimodal model

After understanding the data processing steps, the next stage is to build the model. Multimodal machine learning projects typically focus on three main aspects:

- Dataset handling: Managing the data efficiently is crucial. This involves loading, transforming, and preparing it for the model. A custom PyTorch Dataset class can apply separate processing to images and to text, so each data type is handled in its own way. Each sample is structured as a dictionary with the keys “id”, “image”, “text”, and “label”, and the data is loaded into a DataFrame and balanced across classes.

- Multimodal model architecture: The architecture must integrate multiple types of data. A technique known as mid-level concat fusion is used, where features extracted from both images and text are concatenated. This concatenated output is then used as input for further classification.

- Training logic: Training ties dataset handling and the multimodal architecture together. It defines how the dataset is accessed, how samples are returned and batched, and how the model is optimized on them.
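A minimal sketch of the dataset-handling piece as a PyTorch `Dataset` that returns the dictionary structure described above. The class name `MemeDataset`, the `image_loader` parameter, and the assumption that `records["image"]` holds a file path are illustrative choices, not the original code. A `text_transform` might, for example, wrap a fastText model's `get_sentence_vector` and convert the result to a tensor.

```python
from typing import Any, Callable, Dict, List

import torch
from PIL import Image
from torch.utils.data import Dataset


def load_rgb(path: str) -> Image.Image:
    """Open an image file and force three RGB channels (memes may be RGBA or grayscale)."""
    with Image.open(path) as img:
        return img.convert("RGB")


class MemeDataset(Dataset):
    """Returns one sample per meme as a dict with "id", "image", "text", and "label"."""

    def __init__(
        self,
        records: List[Dict[str, Any]],
        image_transform: Callable,
        text_transform: Callable,
        image_loader: Callable = load_rgb,
    ):
        # records: e.g. rows of a balanced DataFrame, via df.to_dict("records")
        self.records = records
        self.image_transform = image_transform  # e.g. torchvision Resize + ToTensor
        self.text_transform = text_transform    # e.g. text -> fixed-size embedding tensor
        self.image_loader = image_loader

    def __len__(self) -> int:
        return len(self.records)

    def __getitem__(self, idx: int) -> Dict[str, Any]:
        record = self.records[idx]
        # Images and text go through separate, modality-specific pipelines.
        image = self.image_transform(self.image_loader(record["image"]))
        text = self.text_transform(record["text"])
        label = torch.tensor(record["label"], dtype=torch.long)
        return {"id": record["id"], "image": image, "text": text, "label": label}


# Usage with an in-memory image and stand-in transforms (hypothetical values):
sample_records = [{"id": "42", "image": Image.new("RGB", (64, 48)), "text": "nobody:", "label": 1}]
dataset = MemeDataset(
    sample_records,
    image_transform=lambda img: torch.zeros(3, 224, 224),
    text_transform=lambda text: torch.zeros(300),
    image_loader=lambda img: img,  # images are already loaded here
)
print(dataset[0]["label"])  # tensor(1)
```

PyTorch's default collate function batches a dictionary of tensors key by key, so a `DataLoader` over this dataset yields batches with the same four keys.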

In practice, a model architecture called LanguageAndVisionConcat is employed. This module performs mid-level fusion by concatenating the features from image and language modules, which are then fed into a fully connected layer for classification. This design allows for easy adjustments and integration of different modules, making it adaptable to various types of input data. The fusion process is straightforward yet effective for tasks requiring multimodal data understanding, such as meme detection.
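A minimal PyTorch sketch of mid-level concat fusion in the spirit of LanguageAndVisionConcat. The constructor arguments, layer sizes, and dropout are our assumptions, not the original implementation. The tiny stand-in encoders in the usage lines are hypothetical; in practice, the vision module might be a pretrained CNN from `torchvision.models` with its final layer replaced, and the language module a projection of fastText sentence embeddings.

```python
import torch
from torch import nn


class LanguageAndVisionConcat(nn.Module):
    """Encode each modality, concatenate the features, then classify."""

    def __init__(self, language_module, vision_module, language_feature_dim,
                 vision_feature_dim, fusion_output_size=256, num_classes=2, dropout_p=0.1):
        super().__init__()
        self.language_module = language_module  # text input -> (batch, language_feature_dim)
        self.vision_module = vision_module      # image input -> (batch, vision_feature_dim)
        self.fusion = nn.Linear(language_feature_dim + vision_feature_dim, fusion_output_size)
        self.dropout = nn.Dropout(dropout_p)
        self.fc = nn.Linear(fusion_output_size, num_classes)

    def forward(self, text, image):
        text_features = torch.relu(self.language_module(text))
        image_features = torch.relu(self.vision_module(image))
        # Mid-level fusion: join the two feature vectors along the feature axis.
        combined = torch.cat([text_features, image_features], dim=1)
        fused = self.dropout(torch.relu(self.fusion(combined)))
        return self.fc(fused)  # raw logits; pair with nn.CrossEntropyLoss


# Hypothetical stand-in encoders, small enough to run on a CPU.
language_module = nn.Linear(300, 128)  # e.g. a 300-d sentence embedding -> 128 features
vision_module = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 128),
)
model = LanguageAndVisionConcat(language_module, vision_module, 128, 128)
logits = model(torch.randn(4, 300), torch.randn(4, 3, 224, 224))
print(logits.shape)  # torch.Size([4, 2])
```

Because the fusion layer only sees feature vectors, either encoder can be swapped out, provided its output dimension is passed to the constructor.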

Step 4: Training the multimodal model

Before commencing training, outline a strategy for hyperparameter optimization. Utilize techniques such as grid search or random search to find the optimal settings for learning rates, batch sizes, and other model-specific parameters. This step is crucial for maximizing model performance.
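Both search strategies fit in a few lines of plain Python. In this sketch, `toy_evaluate` is a hypothetical stand-in for "train the model with this configuration and return validation accuracy", and the search space values are illustrative. Grid search tries every combination; random search samples a fixed budget of them, which scales better as the number of hyperparameters grows.

```python
import itertools
import random

# Hypothetical search space; sensible ranges depend on the model and data.
SEARCH_SPACE = {
    "lr": [1e-4, 3e-4, 1e-3],
    "batch_size": [16, 32, 64],
    "dropout_p": [0.1, 0.3, 0.5],
}


def grid_search(evaluate, space):
    """Evaluate every combination; return (best_config, best_score)."""
    names = list(space)
    configs = [dict(zip(names, combo)) for combo in itertools.product(*space.values())]
    scored = [(config, evaluate(config)) for config in configs]
    return max(scored, key=lambda pair: pair[1])


def random_search(evaluate, space, n_trials, seed=0):
    """Evaluate n_trials randomly sampled combinations; return (best_config, best_score)."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config):
    """Hypothetical stand-in for training the model and measuring validation accuracy."""
    return -abs(config["lr"] - 3e-4) - abs(config["dropout_p"] - 0.3)


print(grid_search(toy_evaluate, SEARCH_SPACE))
print(random_search(toy_evaluate, SEARCH_SPACE, n_trials=10))
```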

Finally, establish a training loop:

- Define the loss function and select an optimizer to adjust model weights based on training feedback.

- Configure data loaders to handle batch processing, allowing for scalable and efficient training.

- Train the model over multiple epochs, iterating through the dataset in batches and applying backpropagation to refine model parameters based on the computed loss.

- Evaluate the model regularly against a validation set to monitor its performance and make adjustments as needed to improve accuracy and effectiveness.
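The loop described above can be sketched in PyTorch as follows. This is a minimal version under stated assumptions: cross-entropy loss, the Adam optimizer, and batches of `(text, image, label)` tuples. With a dataset that returns dictionaries, unpack `batch["text"]`, `batch["image"]`, and `batch["label"]` instead. `TinyFusion` and the random tensors are hypothetical stand-ins used only to show that the loop runs.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train(model, train_ds, val_ds, epochs=3, lr=1e-3, batch_size=16, device="cpu"):
    """Train a two-input (text, image) classifier; return validation accuracy per epoch."""
    model.to(device)
    loss_fn = nn.CrossEntropyLoss()  # expects raw logits and integer class labels
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    train_loader = DataLoader(train_ds, batch_size=batch_size, shuffle=True)
    val_loader = DataLoader(val_ds, batch_size=batch_size)
    history = []
    for epoch in range(epochs):
        model.train()  # training mode (enables dropout, if any)
        running_loss, n_batches = 0.0, 0
        for text, image, label in train_loader:
            text, image, label = text.to(device), image.to(device), label.to(device)
            optimizer.zero_grad()
            loss = loss_fn(model(text, image), label)
            loss.backward()  # backpropagation
            optimizer.step()
            running_loss += loss.item()
            n_batches += 1
        model.eval()  # evaluation mode for validation
        correct, total = 0, 0
        with torch.no_grad():
            for text, image, label in val_loader:
                text, image, label = text.to(device), image.to(device), label.to(device)
                preds = model(text, image).argmax(dim=1)
                correct += (preds == label).sum().item()
                total += label.numel()
        val_acc = correct / total
        history.append(val_acc)
        print(f"epoch {epoch + 1}: train loss {running_loss / n_batches:.4f}, val acc {val_acc:.3f}")
    return history


class TinyFusion(nn.Module):
    """Hypothetical stand-in for a concat-fusion model, small enough to run on a CPU."""

    def __init__(self, text_dim=300, image_shape=(3, 32, 32), hidden=32, num_classes=2):
        super().__init__()
        c, h, w = image_shape
        self.text_branch = nn.Linear(text_dim, hidden)
        self.image_branch = nn.Sequential(nn.Flatten(), nn.Linear(c * h * w, hidden))
        self.head = nn.Linear(2 * hidden, num_classes)

    def forward(self, text, image):
        features = torch.cat(
            [torch.relu(self.text_branch(text)), torch.relu(self.image_branch(image))], dim=1
        )
        return self.head(features)


def make_synthetic(n):
    """Random tensors only, to show the loop runs; the resulting accuracy is meaningless."""
    return TensorDataset(torch.randn(n, 300), torch.randn(n, 3, 32, 32), torch.randint(0, 2, (n,)))


torch.manual_seed(0)
history = train(TinyFusion(), make_synthetic(64), make_synthetic(32), epochs=2)
```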

Upon completion of training, perform a detailed analysis of the model’s performance. Generate confusion matrices and precision-recall curves to identify where the model excels or fails, particularly focusing on misclassifications. This analysis will guide further refinement and offer insights into model behavior.
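For a binary meme classifier, the core numbers behind a confusion matrix and a precision-recall curve can be computed directly, as sketched below. The IDs, labels, scores, and the 0.5 decision threshold are hypothetical. Listing misclassified IDs supports the manual inspection of errors recommended above. Libraries such as scikit-learn offer equivalent, more complete utilities.

```python
def confusion_counts(y_true, y_pred):
    """Binary confusion matrix counts (positive class = 1)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(t == 1 and p == 1 for t, p in pairs),
        "fp": sum(t == 0 and p == 1 for t, p in pairs),
        "fn": sum(t == 1 and p == 0 for t, p in pairs),
        "tn": sum(t == 0 and p == 0 for t, p in pairs),
    }


def precision_recall(counts):
    """Precision and recall, defined as 0.0 when the denominator is zero."""
    predicted_pos = counts["tp"] + counts["fp"]
    actual_pos = counts["tp"] + counts["fn"]
    precision = counts["tp"] / predicted_pos if predicted_pos else 0.0
    recall = counts["tp"] / actual_pos if actual_pos else 0.0
    return precision, recall


def pr_curve(y_true, scores):
    """(threshold, precision, recall) using each distinct score as a threshold."""
    points = []
    for threshold in sorted(set(scores), reverse=True):
        y_pred = [1 if s >= threshold else 0 for s in scores]
        points.append((threshold, *precision_recall(confusion_counts(y_true, y_pred))))
    return points


def misclassified(ids, y_true, y_pred):
    """IDs of samples the model got wrong, for manual inspection."""
    return [i for i, t, p in zip(ids, y_true, y_pred) if t != p]


# Hypothetical validation results: true labels and predicted P(class 1).
ids = ["m1", "m2", "m3", "m4", "m5"]
y_true = [1, 0, 1, 1, 0]
scores = [0.9, 0.2, 0.4, 0.8, 0.6]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(confusion_counts(y_true, y_pred))    # {'tp': 2, 'fp': 1, 'fn': 1, 'tn': 1}
print(misclassified(ids, y_true, y_pred))  # ['m3', 'm5']
```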

This approach details the basic structure and key components of developing a multimodal AI system capable of analyzing and classifying memes based on visual and textual data.

What does LeewayHertz’s multimodal model development service entail?

LeewayHertz is at the forefront of technological innovation, offering a comprehensive suite of services covering the development and deployment of advanced multimodal models. By harnessing the power of AI and machine learning, LeewayHertz provides end-to-end development of solutions that cater to the unique needs of businesses. The services offered include, but are not limited to:

Consultation and strategy development

- Expert guidance to identify and align business objectives with the capabilities of multimodal AI technology.

- Strategic planning to ensure seamless integration of multimodal models into existing systems and workflows.

Data collection and preprocessing

- Robust mechanisms for collecting, cleaning, and preprocessing data from diverse sources, ensuring high-quality datasets for model training.

- Specialized techniques to handle and synchronize data from different modalities, including text, images, audio, and more.

Custom model development

- Design and development of custom multimodal models tailored to specific industry needs and use cases.

- Utilization of state-of-the-art neural network architectures, such as transformers, to process and integrate information from various data sources.

Model training and validation

- Advanced training techniques to ensure that models are accurate, efficient, and robust.

- Rigorous validation processes to assess the performance of the models across diverse scenarios and datasets.

Attention mechanism and fusion strategy implementation

- Implementation of sophisticated attention mechanisms to enhance the model’s focus on relevant features from different modalities.

- Expertise in various fusion techniques, including early, late, and hybrid fusion, to effectively combine and leverage the strengths of each modality.

Integration and deployment

- Seamless integration of multimodal models into clients’ IT infrastructure, ensuring smooth and efficient operation.

- Deployment solutions that scale, catering to the needs of businesses of all sizes, from startups to large enterprises.

Continuous monitoring and optimization

- Ongoing monitoring of model performance to ensure sustained accuracy and efficiency.

- Continuous optimization of models based on new data, feedback, and evolving business requirements.

Compliance and ethical standards

- Adherence to the highest standards of data privacy and security, ensuring that all solutions are compliant with relevant regulations.

- Commitment to ethical AI practices, ensuring that models are transparent, fair, and unbiased.

Support and maintenance

- Comprehensive support and maintenance services to address any issues promptly and ensure the longevity and relevance of the deployed solutions.

By partnering with LeewayHertz, clients can harness the transformative potential of multimodal models, driving innovation and gaining a competitive edge in their respective industries. With a focus on excellence, innovation, and client satisfaction, LeewayHertz is dedicated to delivering solutions that are not just technologically advanced but also strategically aligned with business goals, ensuring tangible value and impactful outcomes.

Endnote

The emergence of multimodal AI and the release of GPT-4 mark a significant turning point in the field of AI, enabling us to process and integrate inputs from multiple modalities. Imagine a world where machines and technology can understand and respond to human input more naturally and intuitively. With the advent of multimodal AI, this world is possible and quickly becoming a reality. From healthcare and education to entertainment and gaming, the possibilities of multimodal AI applications are limitless.

Furthermore, the impact of multimodal AI on the future of work and productivity cannot be overstated. With its ability to streamline processes and optimize operations, businesses will be able to work more efficiently and effectively, increasing profitability and growth in the process. The potential of this technology is immense, and it is incumbent upon us to embrace and leverage it for the betterment of society. We have a timely opportunity to seize this potential and shape a brighter future for ourselves and for future generations.

Transform how you interact with technology and machines with our intuitive multimodal AI development service. Contact our AI experts for your development needs and stay ahead of the curve!
