Understanding Generative AI: Models, applications, training and evaluation

In a world where creative minds constantly seek inspiration, a unique collaboration is emerging between content creators and a technological force called generative AI. This fusion of human ingenuity and the computational power of algorithms is revolutionizing the creative landscape, pushing boundaries, and opening up new realms of possibility.

Imagine a writer, staring at a blank page, struggling with creative stagnation. Enter ChatGPT—a powerful generative AI tool with remarkable text-generation capabilities. With a simple click, this digital assistant springs to life and the writer’s quest for inspiration is met with a wealth of ideas—rich characters, intricate plot twists, and engaging narratives.

This dynamic partnership between creators and machines marks a turning point for content creation. Empowered by generative AI, creators can break free from creative and artistic limitations, enabling the boundaries between creator and creation to blur.

Generative AI, driven by machine learning algorithms and advanced neural networks, enables machines to go beyond traditional rule-based programming and produce original output. By learning from vast amounts of data, generative AI models can generate new content, simulate human-like behavior, and even compose music, write code, and create visual art.

The implications of generative AI extend far beyond the realm of artistic expression. This technology is quickly impacting diverse industries and sectors, from healthcare and finance to manufacturing and entertainment. For instance, in healthcare, generative AI is used to assist in drug discovery by simulating the effects of different compounds, potentially accelerating the development of life-saving medications. In finance, it can analyze market trends and generate predictive models to aid in investment decisions. In manufacturing, generative AI can optimize designs, improve efficiency, and drive innovation. Marketing and media also feel the impact of generative AI. According to reports, venture capital firms have invested more than $1.7 billion in generative AI solutions over the last three years, with the most funding going to AI-enabled drug discovery and software coding.

Generative AI opens the door to a world where possibilities with regard to digital content creation are boundless. In this article, we explore all vital aspects of generative AI, from its types and applications to its architectural components and future trends, and analyze how this technology might alter how content-based tasks are performed in the future.

- What is generative AI?

- What is a generative AI model? Understanding its various components

- Significance of generative AI models in various fields

- Types of generative AI models

- How do generative AI models work? The step-by-step process

- Training generative AI models: Best practices and techniques

- Evaluating generative AI models: Metrics and tools

- Applications of generative AI models across industries

- The future of generative AI: Trends and opportunities

What is generative AI?

Generative AI refers to a branch of artificial intelligence that focuses on enabling machines to generate new and original content. Unlike traditional AI systems that follow predefined rules and patterns, generative AI leverages advanced algorithms and neural networks to autonomously produce outputs that mimic human creativity and decision-making.

Generative AI models are designed to learn from large datasets and capture the underlying patterns and structures within the data. These models can then generate new content, such as images, text, music, or even videos, that closely resemble the examples they were trained on. By analyzing the data and understanding its inherent characteristics, generative AI algorithms can generate outputs that exhibit similar patterns, styles, and semantic coherence.

The power of generative AI lies in its ability to go beyond simple replication and mimicry. It can create novel and unique content that hasn’t been explicitly programmed into the system. This opens up exciting possibilities for various applications, including art, design, storytelling, virtual reality, and more.

Generative AI models are typically built using advanced neural networks, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). GANs consist of a generator network that creates new instances and a discriminator network that tries to distinguish between the generated instances and real ones. Through an iterative training process, the generator learns to produce increasingly realistic outputs that can deceive the discriminator. VAEs, on the other hand, focus on learning the underlying distribution of the training data, enabling them to generate new samples by sampling from this learned distribution.

Generative AI has the potential to impact various industries and domains. It can assist in creative tasks, automate content generation, enhance virtual environments, aid in drug discovery, optimize designs, and even enable interactive and personalized user experiences.

What is a generative AI model? Understanding its various components

The term “generative AI” is a broad concept that encompasses the entire field of artificial intelligence focused on generating new content or data. It covers the research, techniques, and methodologies involved in developing AI systems that can create new and original output. A generative AI model, on the other hand, is a specific implementation or architecture designed to perform generative tasks. It is a type of artificial intelligence model that learns from existing data and generates new output that is similar to the training data it was exposed to. Generative AI models are used in various fields, including image generation, text generation, music composition, and more.

Further, when it comes to the components that constitute generative AI models, it’s important to note that not all models share the same set of components. The specific components of a generative AI model can vary depending on the architecture and purpose of the model. Different types of generative AI models may employ various components or variations of them. Here are a few examples of generative AI models and their unique components:

- Variational Autoencoders (VAEs): VAEs consist of an encoder network, a decoder network, and a latent space. The encoder maps the input data to a latent space representation, while the decoder generates new outputs from the latent space.

- Generative Adversarial Networks (GANs): GANs comprise two main components: a generator and a discriminator. The generator generates new samples, such as images, while the discriminator evaluates the generated samples and distinguishes them from real ones.

- Transformers: Transformers are widely used in natural language processing tasks. They consist of encoder layers, decoder layers, or both, built around a self-attention mechanism, which enables the model to generate sequences of text or translate between different languages.

- Autoencoders: Autoencoders consist of an encoder and a decoder. The encoder compresses the input data into a latent representation, and the decoder reconstructs the original data from the latent space. Variations of autoencoders, such as denoising autoencoders and variational autoencoders, introduce additional components to enhance the generative capabilities.

It’s important to note that the types and design of components in a generative AI model depend on the specific requirements of the generative AI task and the desired output. Different models may prioritize different aspects, such as image generation, text generation, or music composition, leading to variations in the components they employ.

Significance of generative AI models in various fields

Generative AI has a profound impact on numerous professions and industries, spanning art, entertainment, healthcare, and more. These models possess the ability to automate mundane tasks, deliver personalized experiences, and tackle complex problems. Let’s explore some of the fields where generative AI is making a substantial difference.

- Art and design: Generative AI plays a significant role in art and design by assisting in idea generation, enabling creative exploration, automating repetitive tasks, and fostering collaborative creation. It enhances user experiences through personalized content and augments artistic skills by learning from and working with artists. Generative AI powers various artistic tools and applications, creating interactive installations and real-time procedural graphics.

- Medicine and healthcare: Generative AI models have made an impact in the healthcare sector too. They play a pivotal role in diagnosing illnesses, predicting treatment outcomes, customizing medications, and processing medical images. Healthcare professionals can achieve improved patient outcomes through precise and effective treatment techniques. Moreover, these models automate operational processes, resulting in time and cost savings. By enabling individualized and efficient treatments, generative AI models have the potential to completely transform the healthcare landscape.

- Natural Language Processing (NLP): Generative AI models have a profound impact on natural language processing (NLP). They possess the capability to generate language that closely resembles human speech, which finds applications in chatbots, virtual assistants, and content production software. These models excel in language modeling, sentiment analysis, and text summarization. Organizations leverage generative AI models to automate customer service, enhance content creation efficiency, and analyze vast volumes of textual data. By facilitating effective human-like communication and bolstering language comprehension, generative AI models are poised to revolutionize the field of NLP.

- Music and creative composition: Generative AI has simplified music composition by providing automated tools for generating melodies, harmonies, and entire musical compositions. It can assist musicians in exploring new styles, experimenting with arrangements, and creating unique soundscapes.

- Gaming and virtual reality: Generative AI plays a crucial role in creating immersive gaming experiences and virtual worlds. It can generate realistic environments, non-player characters (NPCs) with lifelike behavior, and dynamic storytelling elements. Generative AI enables game developers to create interactive and engaging gameplay, enhancing the overall gaming experience.

- Fashion and design: In the fashion industry, generative AI is used to create unique clothing designs, patterns, and textures. It helps designers explore innovative combinations, optimize fabric usage, and personalize fashion recommendations for customers. Generative AI brings efficiency, creativity, and customization to the world of fashion.

- Robotics and automation: Generative AI is instrumental in advancing robotics and automation. It enables robots to learn and adapt to new environments, perform complex tasks, and interact with humans more naturally. Generative AI-powered robots can enhance manufacturing processes, logistics, and even assist in healthcare settings.

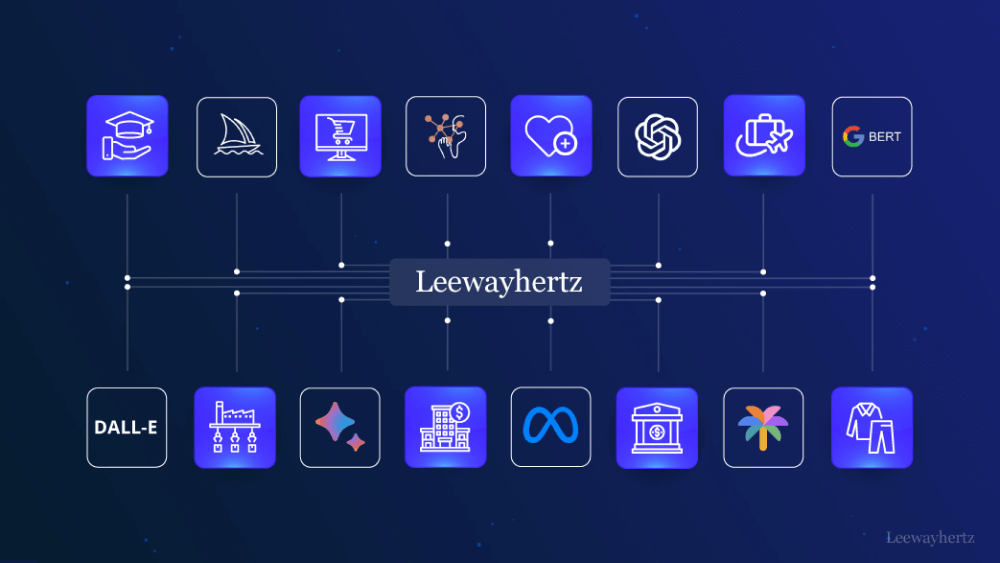

Launch your project with LeewayHertz

Harness the power of our GenAI solutions and services to optimize your workflows and elevate the performance of your customer-facing systems.

Types of generative AI models

There are many generative AI models, each with unique approaches and applications. Some common generative AI models are:

Generative Adversarial Network (GAN) – A GAN is a type of deep learning model used to generate new data similar to the training data. GANs consist of two neural networks, a generator and a discriminator, that are trained in competition with each other to improve the model’s outputs. The generator creates new data resembling the source data, while the discriminator tries to tell the source data apart from the generated data. GANs are commonly used in image and video generation tasks, where they have shown impressive results in generating realistic images, creating animations, and generating synthetic human faces. They are also being used in other areas, such as natural language processing, music generation, and fashion design.

Transformer-based models – Transformer-based models are primarily used for natural language processing tasks, such as language translation, text generation, and summarization. The Transformer model uses a self-attention mechanism to simultaneously attend to all words in the input sequence, allowing it to capture long-range dependencies and context better than traditional NLP models. One of the most common uses of the Transformer model for generative AI is in language translation. With its ability to capture complex linguistic patterns and nuances, the Transformer model is a valuable tool for generating high-quality text in various contexts.
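
To make the self-attention idea concrete, here is a minimal sketch in plain Python. It assumes a single attention head and skips the learned query, key, and value projections that real Transformers use, so each input vector plays all three roles. The function names and the toy embeddings are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention with identity projections (a simplification)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position in the sequence at once.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all the input vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how strongly one position attends to every other position, which is how distant words can influence each other directly.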

Variational Autoencoder (VAE) – Variational Autoencoder (VAE) models are generative deep learning models used for unsupervised learning. They combine autoencoder and probabilistic modeling concepts to learn the underlying structure and distribution of a dataset. The encoder maps input data to a lower-dimensional latent space, while the decoder reconstructs the data from the latent space. VAEs optimize two objectives: reconstruction loss and regularization loss. They can generate new data samples by sampling from the learned latent space distribution. VAEs find applications in image and text generation, as well as data compression. They are a powerful framework for unsupervised learning, representation learning, and generative modeling.

Autoregressive models – Autoregressive models are generative AI models that generate new data from learned probability distributions. They produce new content one element at a time, conditioning each new element on the elements generated before it, until the full output is complete. These models are frequently used to produce text, audio, or image sequences. For instance, a language model may be trained to predict the likelihood of each word in a phrase based on the words that came before it. The model begins with an initial word or group of words and then uses its predictions to produce the following words one at a time. Recurrent Neural Networks (RNNs), a type of artificial neural network, can be used to build autoregressive models. Autoregressive models are popular in natural language processing and speech recognition tasks. They are also used in image and video generation, where the model generates a new image or video frame based on the previous frames.
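
The one-element-at-a-time idea can be sketched with a toy example. The probability table below is hypothetical and written by hand; a real model would learn these probabilities from data and would usually condition on more than one previous word.

```python
import random

# Hypothetical next-word probabilities, keyed by the previous word.
NEXT_WORD = {
    "<start>": {"the": 1.0},
    "the": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"sat": 0.4, "ran": 0.6},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}

def generate(rng, max_len=10):
    """Sample one word at a time, each conditioned on the previous word."""
    words, previous = [], "<start>"
    for _ in range(max_len):
        choices = NEXT_WORD[previous]
        previous = rng.choices(list(choices), weights=list(choices.values()))[0]
        if previous == "<end>":
            break
        words.append(previous)
    return words

sentence = generate(random.Random(0))
```

Every sentence this sketch produces has three words, such as "the cat sat", because each sampled word determines which words may follow.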

Boltzmann machines – A Boltzmann machine is a generative unsupervised model that learns a probability distribution from a dataset and uses that distribution to draw inferences about unseen data. It consists of a set of binary units joined by weighted connections. Boltzmann machines are generative because they can produce new data samples by sampling from the learned probability distribution. This makes them useful for various applications, such as image and speech recognition, anomaly detection, and recommendation systems.

Flow-based models – Flow-based generative models are used for generating high-quality, realistic data samples. They have grown in prominence recently because of their capacity for producing high-quality content, handling large datasets, and carrying out efficient inference. Compared with other generative AI model types, they can handle large datasets with high-dimensional inputs, produce high-quality samples without adversarial training, and perform efficient inference by directly computing the probability density function. However, they may not be as flexible as other models for modeling complicated distributions, and they can be computationally expensive to train, particularly for complex datasets.

How do generative AI models work? The step-by-step process

Generative AI models work by analyzing the patterns in a large dataset and using that knowledge to generate new content. The process can be broken down into several steps.

- Data gathering: The first step in creating a generative AI model is to collect a large dataset of examples for the model to learn from. These examples can be images, audio, text, or any other form of data that the model is intended to produce.

- Preprocessing: Once the data has been gathered, it must be preprocessed before being fed into the generative AI model. This means cleaning the data, removing errors, and converting it into a structure that the model can process.

- Training: The generative AI model is then trained on the preprocessed data. During training, machine learning algorithms examine the patterns and relationships in the data, and the model learns how to create new content based on these patterns.

- Validation: Once the model has been trained, it must be validated to make sure it produces high-quality output. The model is tested on held-out sample data that was not used during training, and its performance and accuracy are evaluated.

- Generation: When the model has been trained and validated, it can be used to produce new content. A set of input parameters or data is provided to the model, which then applies its learned patterns to produce new content comparable to the data it was trained on.

- Refinement: Human specialists may polish or improve the generated content. This may entail choosing the best results from the generative AI model or making modest tweaks to ensure the content meets certain criteria or requirements.
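
The steps above can be sketched end to end with a deliberately tiny "model": a single Gaussian fitted to numbers. This is only an illustration under simplifying assumptions. The data is simulated, the 800/200 split and the two-standard-deviation acceptance rule are arbitrary choices, and real generative models are far larger.

```python
import math
import random
import statistics

rng = random.Random(42)

# 1. Data gathering: simulated measurements stand in for a real dataset.
raw = [rng.gauss(5.0, 2.0) for _ in range(1000)] + [float("nan")] * 5

# 2. Preprocessing: drop invalid records.
clean = [x for x in raw if not math.isnan(x)]

# Hold out part of the data for validation.
train, held_out = clean[:800], clean[800:]

# 3. Training: fit the model parameters (mean and standard deviation).
mu = statistics.mean(train)
sigma = statistics.pstdev(train)

def log_density(x):
    """Log of the Gaussian density with the fitted parameters."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

# 4. Validation: average log-likelihood on data the model never saw.
validation_score = statistics.mean([log_density(x) for x in held_out])

# 5. Generation: draw new samples from the learned distribution.
samples = [rng.gauss(mu, sigma) for _ in range(5)]

# 6. Refinement: a simple rule stands in for a human reviewer.
accepted = [s for s in samples if abs(s - mu) <= 2 * sigma]
```

The same sequence of stages applies to larger models; only the model family, the loss, and the validation metric change.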

Training generative AI models: Best practices and techniques

GANs: Training a GAN involves training two networks simultaneously: a generator and a discriminator. The generator network generates samples, while the discriminator network distinguishes between real and generated samples. During training, the generator is updated to improve its ability to generate realistic samples, while the discriminator is updated to improve its ability to distinguish between real and generated samples. Training is iterative: the two networks are updated alternately, with the goal of approaching a Nash equilibrium in which neither network can improve by changing its strategy alone.
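
The two competing objectives can be written down in a few lines. This sketch assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the discriminator outputs are made-up numbers, and no networks are actually trained here.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score near 1, fakes near 0."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: fakes should be scored as real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
early = {"real": [0.9, 0.8], "fake": [0.1, 0.2]}  # fakes are easy to spot
later = {"real": [0.6, 0.5], "fake": [0.5, 0.4]}  # fakes are harder to spot

g_early = generator_loss(early["fake"])
g_later = generator_loss(later["fake"])
d_early = discriminator_loss(early["real"], early["fake"])
d_later = discriminator_loss(later["real"], later["fake"])
```

As the generator improves, its loss falls while the discriminator's loss rises. In a full training loop, each network takes a gradient step on its own loss in turn.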

VAEs: Training a VAE involves encoding input data into a lower-dimensional latent space using an encoder network and decoding the latent representation back into the original input space using a decoder network. The VAE is trained by maximizing a variational lower bound, which combines a reconstruction term with a KL divergence term that encourages the learned latent distribution to stay close to a standard normal distribution. The model is trained using backpropagation with stochastic gradient descent or a related optimization algorithm.
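
As a rough sketch of that objective for a single example, the code below adds a squared-error reconstruction term to the closed-form KL divergence between a diagonal Gaussian and a standard normal. Squared error is one possible choice of reconstruction loss, and the encoder and decoder outputs are hypothetical values rather than the output of real networks.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, exp(log_var)) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

def reconstruction_loss(x, x_hat):
    """Squared error between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var):
    """Negative variational lower bound (up to constants) for one example."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)

# Hypothetical encoder and decoder outputs for a single 3-value input.
x = [0.2, 0.7, 0.1]
x_hat = [0.25, 0.6, 0.1]
mu, log_var = [0.5, -0.3], [0.0, -0.2]
loss = vae_loss(x, x_hat, mu, log_var)
```

The KL term is zero only when the encoder outputs exactly a standard normal, so it acts as the regularizer that keeps the latent space well behaved for sampling.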

Autoregressive models: An autoregressive model is trained to predict the probability distribution of the next item in a sequence given the preceding items. Training uses maximum likelihood estimation, which is equivalent to minimizing the negative log-likelihood of the training data. The loss measures the discrepancy between the predicted probability distribution and the item that actually comes next. For recurrent architectures, the model’s parameters are updated using backpropagation through time, a technique that propagates the error gradient from the model’s output back through the earlier time steps.
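
For intuition, here is a minimal sketch that swaps the neural network for a character-level bigram table. For such a table, the maximum likelihood estimate has a closed form (normalized counts), so no gradient descent is needed. The corpus and function names are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict

def fit_bigram(sequences):
    """Maximum likelihood estimate: normalized counts of (previous, next) pairs."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return {prev: {nxt: c / sum(ctr.values()) for nxt, c in ctr.items()}
            for prev, ctr in counts.items()}

def negative_log_likelihood(model, seq):
    """Sum of -log p(next | previous) over the sequence."""
    return sum(-math.log(model[prev][nxt]) for prev, nxt in zip(seq, seq[1:]))

corpus = ["abab", "abac"]  # toy training sequences of characters
model = fit_bigram(corpus)
nll = negative_log_likelihood(model, "abab")
```

This sketch raises a `KeyError` for transitions never seen in training. Practical models avoid that by smoothing the counts or by using a neural network that assigns some probability to every possible next item.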

Boltzmann machines: Boltzmann machines, most commonly in their restricted form (RBMs), are often trained using the Contrastive Divergence algorithm, which iteratively adjusts the weights of the connections between binary units based on the difference between statistics of the observed data and statistics of samples generated by the model. Training examples are fed to the network, and the updates approximately increase the likelihood of the input data until the model converges to a stable solution.
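
A minimal sketch of one-step Contrastive Divergence (CD-1) for a small restricted Boltzmann machine follows. The layer sizes, learning rate, number of passes, and toy data are all assumptions chosen for illustration, and the code favors readability over speed.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_units(rng, inputs, weights, bias):
    """Return activation probabilities and sampled binary states for one layer.

    weights[i][j] connects input unit i to output unit j.
    """
    probs = []
    for j in range(len(bias)):
        total = bias[j] + sum(x * weights[i][j] for i, x in enumerate(inputs))
        probs.append(sigmoid(total))
    states = [1 if rng.random() < p else 0 for p in probs]
    return probs, states

def cd1_update(rng, v0, W, b_vis, b_hid, lr=0.1):
    """Apply one CD-1 update in place for a single binary training vector."""
    W_t = [list(col) for col in zip(*W)]            # hidden-to-visible view
    h0_prob, h0 = sample_units(rng, v0, W, b_hid)   # driven by the data
    _, v1 = sample_units(rng, h0, W_t, b_vis)       # the model's reconstruction
    h1_prob, _ = sample_units(rng, v1, W, b_hid)    # driven by the reconstruction
    for i in range(len(b_vis)):
        for j in range(len(b_hid)):
            # Data-driven correlation minus model-driven correlation.
            W[i][j] += lr * (v0[i] * h0_prob[j] - v1[i] * h1_prob[j])
        b_vis[i] += lr * (v0[i] - v1[i])
    for j in range(len(b_hid)):
        b_hid[j] += lr * (h0_prob[j] - h1_prob[j])

rng = random.Random(0)
data = [[1, 1, 0, 0], [1, 0, 1, 0]]  # unit 0 is always on, unit 3 always off
W = [[rng.gauss(0, 0.01) for _ in range(2)] for _ in range(4)]
b_vis, b_hid = [0.0] * 4, [0.0] * 2
for _ in range(200):
    for v in data:
        cd1_update(rng, v, W, b_vis, b_hid)
```

After training, the visible bias of the always-on unit has moved upward and that of the always-off unit downward, reflecting what the model has observed in the data.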

Flow-based models: Flow-based models are trained using maximum likelihood estimation, where the model is optimized to match the probability distribution of the training data. Because the model is built from invertible transformations, it can compute the exact probability density of each input. During training, a batch of inputs is provided to the model, and the loss is the negative log-likelihood of those inputs under the model. Backpropagation is then used to update the model’s parameters.
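
The simplest possible flow is a one-dimensional affine transform of a standard normal variable, and it is enough to show how the exact likelihood is computed through the change-of-variables formula. The parameter values and data below are illustrative assumptions; real flows stack many learned invertible layers.

```python
import math

def standard_normal_logpdf(z):
    return -0.5 * (z * z + math.log(2 * math.pi))

def flow_log_density(x, scale, shift):
    """Log-density of x under the flow x = scale * z + shift, with z ~ N(0, 1)."""
    z = (x - shift) / scale          # inverse transform back to the base variable
    log_det = -math.log(abs(scale))  # log |dz/dx|, the change-of-variables term
    return standard_normal_logpdf(z) + log_det

def negative_log_likelihood(data, scale, shift):
    """Training loss: average negative log-density of the data."""
    return -sum(flow_log_density(x, scale, shift) for x in data) / len(data)

data = [4.1, 5.3, 4.8, 6.0, 5.2]
loss_poor = negative_log_likelihood(data, scale=1.0, shift=0.0)
loss_good = negative_log_likelihood(data, scale=0.7, shift=5.0)
```

Parameters that place the density where the data actually lies give a lower loss, which is the signal that gradient-based training follows.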

Evaluating generative AI models: Metrics and tools

The evaluation of generative AI models is an important part of the development process, as it helps to measure the quality and performance of the model. The specific evaluation process varies depending on the type of generative AI model being used.

GANs: GAN models are commonly evaluated using the Fréchet Inception Distance (FID), which gauges how similar the generated images are to real images. The FID compares the distributions of generated and real images in a feature space computed by a pre-trained classifier network. A lower FID indicates better performance.
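
To show the idea behind the metric, the sketch below computes the Fréchet distance for one-dimensional features, where the general formula reduces to a sum of two squared differences. This is a simplification: the real FID uses high-dimensional features from a pre-trained network and full covariance matrices. The feature values here are made up.

```python
import statistics

def frechet_distance_1d(real_features, generated_features):
    """Fréchet distance between two 1-D Gaussians fitted to the feature values.

    In one dimension the formula reduces to
    (difference of means)^2 + (difference of standard deviations)^2.
    """
    mu_r = statistics.mean(real_features)
    mu_g = statistics.mean(generated_features)
    sd_r = statistics.pstdev(real_features)
    sd_g = statistics.pstdev(generated_features)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2

real = [0.0, 1.0, 2.0, 3.0]
close = [0.1, 1.1, 2.1, 3.1]   # generated features that resemble the real ones
far = [5.0, 5.0, 5.0, 5.0]     # generated features with no variety

score_close = frechet_distance_1d(real, close)
score_far = frechet_distance_1d(real, far)
```

The generated set that matches both the average and the spread of the real features gets the lower score, which is why the metric penalizes both poor quality and lack of variety.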

VAEs: Variational Autoencoder (VAE) models are evaluated using reconstruction error together with sample quality metrics such as the Inception Score and the Fréchet Inception Distance. These metrics assess how well the model can recreate the original data and how good its generated samples are. Generally, a mix of quantitative and qualitative metrics is used to evaluate VAE models.

Autoregressive models: Autoregressive models are commonly assessed based on their predictive performance in determining the next item in a sequence of data. This evaluation is often done using a metric known as perplexity, which measures the model’s ability to accurately predict the upcoming item in the sequence. Perplexity is calculated as the exponential of the average negative log-likelihood per token (for example, per word) of the test data, and it indicates how well the model captures the sequence’s underlying patterns.

A lower perplexity score indicates that the model exhibits less confusion and is more effective at predicting the next item in the sequence. This measurement reflects the model’s ability to comprehend the dependencies and structure within the data. By striving for lower perplexity, autoregressive models aim to optimize their performance and improve the accuracy of their predictions, enhancing their ability to generate coherent and meaningful sequences of data.
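
A short sketch of the calculation, assuming we already have the probability the model assigned to each correct token in a test sequence (the probabilities below are made up):

```python
import math

def perplexity(token_probs):
    """Exponential of the average negative log-probability of the true tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

confident = perplexity([0.5, 0.5, 0.5, 0.5])
uncertain = perplexity([0.1, 0.1, 0.1, 0.1])
```

A model that gives every correct token a probability of 0.5 has a perplexity of about 2, while one that gives 0.1 has a perplexity of about 10. Perplexity can be read as the number of equally likely options the model is effectively choosing between at each step.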

Boltzmann machines: Boltzmann machines are typically evaluated using log-likelihood, which measures how well the model accounts for data similar to the training data. Log-likelihood is calculated as the log probability of the test data under the model. Because the exact value requires the model’s normalizing constant, it is usually estimated for larger models. A higher log-likelihood indicates better performance.

Flow-based models: Because flow-based models define an exact, tractable probability density, they are typically evaluated by directly computing the log-likelihood of held-out test data, which quantifies how well the model fits the given dataset. A higher log-likelihood indicates a better fit.

Applications of generative AI models across industries

Generative AI models have a wide range of applications across various industries, including:

- Healthcare: Generative AI models can be used in the healthcare industry to generate synthetic medical images for training diagnostic models, automate treatment processes, and generate patient data for research purposes.

- Finance: Generative AI models can be used in finance to generate synthetic financial data for risk analysis and portfolio management.

- Gaming: These models can be used in the gaming industry to create game content such as landscapes, characters, storylines, 3D visuals, and backdrop images.

- E-commerce: They can be used in e-commerce to generate product listings, descriptions, recommendations, and display images.

- Advertising: Generative AI models can be used in advertising to generate personalized advertisements, marketing campaigns, banners and product recommendations for various genres.

- Architecture and design: Generative AI models can be used in architecture and design to generate building designs, floor plans, and landscapes.

- Manufacturing: The technology also helps the manufacturing industry to generate designs for new products, optimize production processes, and generate 3D models for prototypes.

- Natural language processing: They are used in natural language processing to generate text, speech, and dialogue for conversational AI systems, data interpretation, sentiment analysis, etc.

- Robotics: Generative AI models can be used to plan and optimize robot tasks based on various criteria such as efficiency, safety, and resource utilization. This can enable robots to make more informed decisions and perform tasks more efficiently.

The future of generative AI: Trends and opportunities

According to tech gurus and AI experts, the future of generative AI is bright, and several trends and opportunities are likely to shape this field. The global generative AI market is expected to grow at a CAGR of 34.3% from 2022 to 2030. Generative AI tools like MidJourney, Jasper, and ChatGPT are revolutionizing creative work, recording millions of active users daily. Here is how the future of generative AI may look:

Generative AI future trends:

Generative AI is poised to evolve significantly in the future. Here are some ways in which it is likely to progress:

- Enhanced realism: Generative AI models will continue to advance in generating content with higher levels of realism and fidelity. Through improved training techniques, larger datasets, and more powerful computational resources, AI-generated images, videos, and audio will become increasingly indistinguishable from their real counterparts.

- Cross-domain creativity: Generative AI will explore the ability to generate content across different domains and art forms. This includes generating artwork in various styles, creating music from visual input, or even generating 3D models from textual descriptions. The ability to bridge different creative domains will lead to innovative and cross-disciplinary artistic expressions.

- Improved control and guidance: Future generative AI models will likely provide users with more control and guidance over the generated content. Users will have the ability to fine-tune and steer the AI models to align with their creative vision or specific requirements. This will empower artists, designers, and content creators to use AI as a tool to augment their own creativity and achieve desired outcomes.

- Ethical and responsible AI: As generative AI becomes more prevalent, there will be an increased emphasis on addressing ethical considerations. Future developments will focus on developing frameworks and guidelines to ensure fairness, transparency, and accountability in generative AI. This includes mitigating biases, addressing potential misuse, and enabling user control over generated content.

- Integration with other technologies: Generative AI will integrate with other emerging technologies to unlock new possibilities. This includes combining AI with virtual reality, augmented reality, or mixed reality to create immersive and interactive experiences. Integration with robotics and automation will also enable AI-generated content to be applied in physical spaces and real-world applications.

- Continual learning and adaptive generation: Future generative AI models are expected to possess the ability to continuously learn and adapt to changing environments. This trend involves developing models that can incrementally update their knowledge, learn from new data, and adapt their generation capabilities over time. Continual learning enables generative AI to stay relevant, incorporate new trends, and refine its output based on evolving user preferences.

- Explainable and interpretable generative models: There is a growing demand for generative AI models that can provide explanations and insights into their decision-making processes. Explainable and interpretable generative models aim to provide users with a clear understanding of how the model generates content and the factors that influence its output. This trend promotes transparency and trust, and it gives users more control over the generated content.

- Hybrid approaches and model fusion: The future of generative AI might involve combining different techniques and models to create hybrid approaches. This trend explores the fusion of generative models with other AI methods such as reinforcement learning, unsupervised learning, or meta-learning. Hybrid approaches aim to leverage the strengths of different models and enhance the overall generative capabilities, leading to more sophisticated and versatile AI systems.

- Real-time content generation: The demand for real-time and interactive generative AI experiences is expected to increase. Future trends focus on developing models that can generate content on the fly, allowing users to interact with and influence the generation process in real time. This opens up possibilities for dynamic storytelling, interactive art installations, personalized virtual environments, and responsive AI-generated content.

Generative AI future opportunities:

- Personalized and interactive experiences: Generative AI offers exciting prospects for personalized and interactive experiences. By leveraging user data and preferences, AI models can generate customized content, recommendations, and interfaces tailored to individual users. This opens avenues for highly engaging and immersive user experiences in areas such as entertainment, gaming, advertising, and e-commerce.

- Creative collaboration and augmentation: Generative AI will continue to evolve as a collaborative partner for human creators. Future models will facilitate seamless collaboration between AI and humans, allowing for co-creation and idea generation. AI will assist in generating initial concepts, exploring variations, and offering suggestions, while human creators provide the final artistic direction and subjective judgment.

- Data synthesis and augmentation: Generative AI can be employed to synthesize new data samples that follow the patterns and characteristics of existing data. This is particularly useful in scenarios where the availability of labeled data is limited. AI models can generate synthetic data to augment training sets, improve model performance, and address issues like data imbalance or scarcity.

- Generative AI for scientific research and simulations: Generative AI has significant potential in scientific research and simulations. AI models can generate synthetic data to simulate complex phenomena, predict outcomes, and explore hypothetical scenarios. This can accelerate scientific discovery, optimize experiments, and aid in decision-making processes in fields such as physics, chemistry, biology, and environmental sciences.

These trends and opportunities reflect the ongoing evolution and advancement of generative AI, encompassing aspects such as ethics, continual learning, explainability, hybrid approaches, and real-time interactivity. Embracing these trends and opportunities will shape the future landscape of generative AI and unlock new possibilities for creative expression, problem-solving, and human-AI collaboration.

Endnote

Generative AI stands as a testament to the potential of human ingenuity combined with advanced machine intelligence. It has profoundly impacted fields like art, design, and creative writing, offering new avenues for exploration and innovation. From generating stunning visual artworks and composing captivating music to writing program code and in-depth articles, generative AI has showcased its ability to push the boundaries of what is possible within the digital content creation space.

However, this technology is not a replacement for human creativity but a powerful tool that amplifies and expands our creative capabilities. It is, rather, a collaborator, a source of inspiration, and a catalyst for creators across industries.

As we continue to embrace generative AI, it is crucial to remain mindful of ethical considerations and responsible practices. Transparency, fairness, and accountability must be at the forefront of our development and deployment of generative AI systems to ensure that they benefit society as a whole.

Looking to the future, generative AI holds immense promise. Advancements in technology, such as meta-learning, unsupervised learning, and reinforcement learning, will push the boundaries even further. The potential for enhanced realism, increased interactivity, and cross-domain creativity is also awe-inspiring.

The possibilities are boundless in this dynamic landscape, where human imagination converges with machine intelligence. By leveraging generative AI responsibly, we can unlock new dimensions of creativity, create immersive experiences, and shape a future where the collaboration between humans and AI drives unprecedented innovation.

Ready to leverage the potential of generative AI? Build a robust generative AI solution today! Contact LeewayHertz’s generative AI developers for your consultancy and development needs.
