Build an LLM-powered application using LangChain: A comprehensive step-by-step guide

In the ever-evolving AI landscape, language models have taken center stage, redefining how we interact with machines. With ChatGPT gaining widespread recognition and tech giants like Google coming up with their own ChatGPT-like solutions, language models, especially LLMs, have become a major talking point in the tech space. LLMs represent a significant leap forward in AI’s ability to understand, interpret and generate human language. These models are trained on vast amounts of text data, enabling them to grasp intricate linguistic patterns and semantic nuances. With unprecedented language processing capabilities, they enable users to generate high-quality content with remarkable accuracy and efficiency. Applications based on LLMs excel in tasks like text generation, sentiment analysis, language translation and conversational interfaces.

LangChain, a framework built around LLMs, opens up a world full of possibilities in natural language processing, enabling the creation of various applications, including chatbots and question-answering tools.

This article provides a comprehensive overview of LangChain, covering its conceptual basics, use cases, and the best practices to be followed while building LLM-based applications. Whether you are an experienced developer looking to integrate a language model into your project or a novice interested in exploring the capabilities of LLMs, this guide is designed to help you get started.

- Introduction to LangChain and LLM-powered applications

- LangChain: Its components and working

- Different types of models that are used in LangChain

- Setting up a LangChain project: Building LLM-powered applications

- LangChain’s applications & use cases

- Best practices for building LLM-powered applications with LangChain

Introduction to LangChain and LLM-powered applications

LangChain is an advanced framework that allows developers to create language model-powered applications. It provides a set of tools, components, and interfaces that make building LLM-based applications easier. With LangChain, managing interactions with language models, chaining together various components, and integrating resources like APIs and databases is a breeze. The platform includes a set of APIs that can be integrated into applications, allowing developers to add language processing capabilities without having to start from scratch. Hence, LangChain simplifies and streamlines the process of developing LLM-powered apps, making it appropriate for developers of all skill levels.

Chatbots, virtual assistants, language translation tools and sentiment analysis tools are all examples of LLM-powered apps. Developers utilize LangChain to build custom language model-based apps tailored to specific use cases.

As natural language processing becomes more advanced and widely used, the possible applications for this technology could become endless.

LangChain: Its components and working

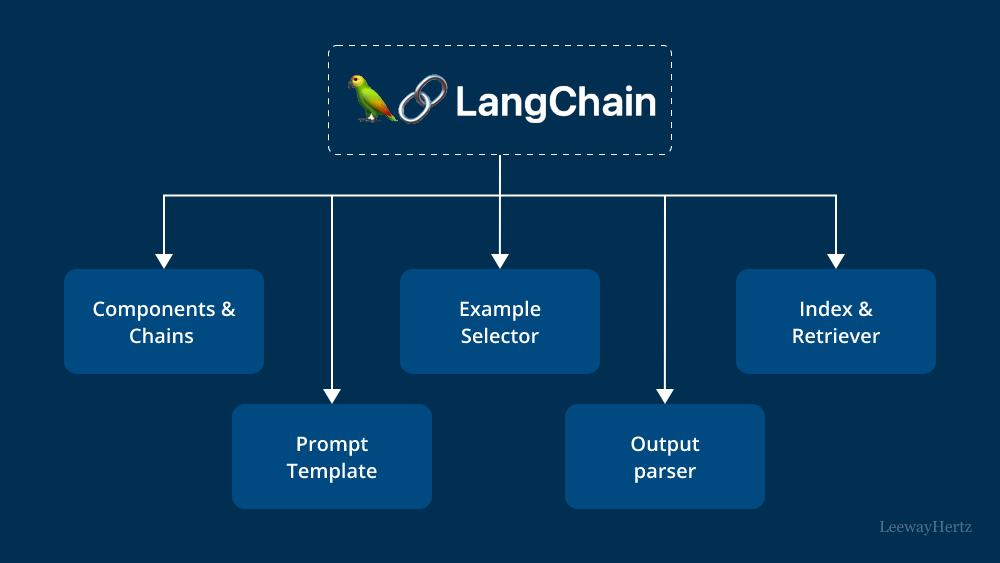

One of the unique aspects of LangChain is its focus on flexibility and modularity. By breaking down the natural language processing pipeline into individual components, developers can easily mix and match these building blocks to create custom workflows that meet their specific needs. This makes LangChain a highly adaptable framework that can be used to build conversational AI applications for a wide range of use cases and industries.

LangChain’s key features include components and chains, prompt templates and values, example selectors, output parsers, indexes and retrievers, chat message history, document loaders, text splitters, agents, and toolkits. All these enable developers to build end-to-end conversational AI applications that can deliver personalized and engaging user experiences.

- Components and chains: In LangChain, a “component” refers to a block of code or a module that performs a specific function within the natural language processing pipeline. Components can be combined into “chains” to create custom workflows for specific use cases. For example, a chain for a customer service chatbot might include components for sentiment analysis, intent recognition, and response generation.
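As a rough illustration of the idea, the sketch below models components as plain Python functions and a chain as their composition. It does not use LangChain itself; `make_chain`, `normalize`, `tag_intent`, and `draft_reply` are hypothetical names invented for this example, and the keyword-based intent check is only a stand-in for a real model call.

```python
from typing import Callable, List

# Assumption for illustration: a "component" is any function from str to str.
Component = Callable[[str], str]


def make_chain(components: List[Component]) -> Component:
    """Compose components so that each one's output feeds the next."""
    def run(text: str) -> str:
        for component in components:
            text = component(text)
        return text
    return run


# Hypothetical stand-ins for real components.
def normalize(text: str) -> str:
    # Collapse repeated whitespace and lowercase the message.
    return " ".join(text.split()).lower()


def tag_intent(text: str) -> str:
    # Toy intent recognition based on a keyword.
    intent = "refund" if "refund" in text else "general"
    return f"[intent={intent}] {text}"


def draft_reply(text: str) -> str:
    return f"Reply drafted for: {text}"


support_chain = make_chain([normalize, tag_intent, draft_reply])
print(support_chain("  I want a   REFUND please "))
```

Because every step shares the same input and output type, steps can be reordered or swapped without touching the rest of the chain, which is the modularity the framework aims for.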

- Prompt templates and values: Prompt templates are predefined prompts that can be reused across multiple chains. Values can be inserted into prompt templates to make them more dynamic and adaptable to specific use cases. For example, a prompt template might ask the user for their name, and a value could be inserted into the template to personalize the response. Prompt templates are useful when you need to generate prompts based on dynamic resources.

from langchain.llms import OpenAI

from langchain import PromptTemplate

llm = OpenAI(model_name="text-davinci-003", openai_api_key="YourAPIKey")

# Notice "location" below; it is a placeholder for a value supplied later

template = """ I really want to travel to {location}. What should I do there? Respond in one short sentence """

prompt = PromptTemplate(

input_variables=["location"],

template=template,

)

final_prompt = prompt.format(location="Rome")

print(f"Final Prompt: {final_prompt}")

print("-----------")

print(f"LLM Output: {llm(final_prompt)}")

In this example, the final prompt is generated by replacing the {location} placeholder with “Rome,” and the model then replies with one short sentence. A sample run produces:

Final Prompt: I really want to travel to Rome. What should I do there? Respond in one short sentence

-----------

LLM Output: Visit the Colosseum, the Pantheon, and the Trevi Fountain for a taste of Rome's ancient and modern culture.
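Conceptually, filling in a prompt template resembles Python's built-in string formatting. The following minimal sketch deliberately avoids LangChain and shows the same substitution with `str.format`; it is meant only to make the placeholder mechanism concrete, not to describe how PromptTemplate is implemented.

```python
# The {location} placeholder is filled in when format() is called.
template = "I really want to travel to {location}. What should I do there? Respond in one short sentence"

final_prompt = template.format(location="Rome")
print(final_prompt)

# The same template can be reused with a different value.
print(template.format(location="Lisbon"))
```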

- Example selectors: Example selectors choose which examples to include in a few-shot prompt from a list of examples that you supply. Showing the model examples that are relevant to the current input helps it generate more accurate and relevant responses. Example selectors can be customized to prioritize specific types of examples or to exclude irrelevant ones, so the prompt is tailored to the user input.

from langchain.prompts.example_selector import SemanticSimilarityExampleSelector

from langchain.vectorstores import FAISS

from langchain.embeddings import OpenAIEmbeddings

from langchain.prompts import FewShotPromptTemplate, PromptTemplate

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003", openai_api_key="YourAPIKey")

example_prompt = PromptTemplate(

input_variables=["input", "output"],

template="Example Input: {input}\nExample Output: {output}",

)

# Examples of locations where nouns are found

examples = [

{"input": "pirate", "output": "ship"},

{"input": "pilot", "output": "plane"},

{"input": "driver", "output": "car"},

{"input": "tree", "output": "ground"},

{"input": "bird", "output": "nest"},

]

# SemanticSimilarityExampleSelector will select examples that are similar to your input by semantic meaning

example_selector = SemanticSimilarityExampleSelector.from_examples(

# This is the list of examples available to select from.

examples,

# This is the embedding class used to produce embeddings which are used to measure semantic similarity.

OpenAIEmbeddings(openai_api_key="YourAPIKey"),

# This is the VectorStore class that is used to store the embeddings and do a similarity search over.

FAISS,

# This is the number of examples to produce.

k=2,

)

similar_prompt = FewShotPromptTemplate(

# The object that will help select examples

example_selector=example_selector,

# Your prompt

example_prompt=example_prompt,

# Customizations that will be added to the top and bottom of your prompt

prefix="Give the location an item is usually found in",

suffix="Input: {noun}\nOutput:",

# What inputs your prompt will receive

input_variables=["noun"],

)

For instance, given the noun “student,” the example selector might find “driver” and “pilot” to be the most similar examples.

# Select a noun!

my_noun = "student"

print(similar_prompt.format(noun=my_noun))

Output:

Give the location an item is usually found in

Example Input: driver
Example Output: car

Example Input: pilot
Example Output: plane

Input: student
Output:

- Output Parsers: Output parsers are used to process and filter the model’s responses. They can be used to remove unwanted content, format the output in a specific way, or add additional information to the response. Output parsers enable you to obtain structured output, such as JSON objects, from the language model’s responses.

from langchain.output_parsers import StructuredOutputParser, ResponseSchema

from langchain.prompts import PromptTemplate

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003", openai_api_key="YourAPIKey")

# How you would like your response structured. This is basically a fancy prompt template

response_schemas = [

ResponseSchema(name="bad_string", description="This a poorly formatted user input string"),

ResponseSchema(name="good_string", description="This is your response, a reformatted response"),

]

# How you would like to parse your output

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)

After defining the response schemas, the output parser is created from them. Next, generate the formatting instructions using the get_format_instructions() method of the output parser.

# See the formatting instructions generated for your schemas

format_instructions = output_parser.get_format_instructions()

print(format_instructions)

The printed instructions include a JSON schema like this:

{
    "bad_string": string // This a poorly formatted user input string
    "good_string": string // This is your response, a reformatted response
}

Now, create a prompt template with placeholder variables for the formatting instructions and user input. This template will also include a section for the AI-generated response.

template = """
You will be given a poorly formatted string from a user.
Reformat it and make sure all the words are spelled correctly

{format_instructions}

% USER INPUT:
{user_input}

YOUR RESPONSE:
"""

prompt = PromptTemplate(input_variables=["user_input"], partial_variables={"format_instructions": format_instructions}, template=template)

promptValue = prompt.format(user_input="welcom to califonya!")

print(promptValue)

Once you’ve formatted the prompt, send it to the language model and obtain the response:

llm_output = llm(promptValue)

print(repr(llm_output))

Output (raw string):

json\n{\n\t"bad_string": "welcom to califonya!",\n\t"good_string": "Welcome to California!"\n}\n

You can then parse the response using the output parser to get a structured JSON object (a dictionary in Python):

output_parser.parse(llm_output)
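The parsing step can be pictured as pulling the JSON payload out of the model's text and decoding it. The sketch below is a simplified stand-in written with the standard library only, assuming the model wraps its JSON in a Markdown code fence; `parse_json_block` is a hypothetical helper, not LangChain's parser.

```python
import json
import re

# Build the three-backtick fence marker programmatically to keep this listing readable.
FENCE = "`" * 3


def parse_json_block(llm_text: str) -> dict:
    """Decode the first fenced JSON block, or the whole text if no fence is found."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE)
    match = re.search(pattern, llm_text, re.DOTALL)
    payload = match.group(1) if match else llm_text
    return json.loads(payload.strip())


# A response shaped like the one in the example above.
raw = (
    FENCE + "json\n"
    '{\n\t"bad_string": "welcom to califonya!",\n\t"good_string": "Welcome to California!"\n}\n'
    + FENCE
)

parsed = parse_json_block(raw)
print(parsed["good_string"])
```

A production parser would also need to handle malformed JSON, for example by catching `json.JSONDecodeError` and retrying the request.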


- Indexes and retrievers: Indexes structure your documents, along with their metadata, so that a language model can work with them. Retrievers search an index and return the documents most relevant to a query. Together they help the model generate more accurate and relevant responses by supplying it with context.

from langchain.document_loaders import TextLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.vectorstores import FAISS

from langchain.embeddings import OpenAIEmbeddings

loader = TextLoader("data/PaulGrahamEssays/worked.txt")

documents = loader.load()

# Get your splitter ready

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=50)

# Split your docs into texts

texts = text_splitter.split_documents(documents)

# Get embedding engine ready

embeddings = OpenAIEmbeddings(openai_api_key="YourAPIKey")

# Embed your texts

db = FAISS.from_documents(texts, embeddings)

# Init your retriever with the default search settings

retriever = db.as_retriever()

By initializing the retriever from a vector store, you can find relevant documents based on your query. Displaying the retriever shows its search settings:

VectorStoreRetriever(vectorstore=<FAISS vector store>, search_type='similarity', search_kwargs={})

The retriever converts the query into a vector, compares it to the vectors in the store, and returns the most similar documents. In the example below, we search for documents related to building things.

docs = retriever.get_relevant_documents("what types of things did the author want to build?")

print("\n\n".join([x.page_content[:200] for x in docs[:2]]))

Output:

standards; what was the point? No one else wanted one either, so off they went. That was what happened to systems work.I wanted not just to build things, but to build things that would last.In this di

much of it in grad school.Computer Science is an uneasy alliance between two halves, theory and systems. The theory people prove things, and the systems people build things. I wanted to build things.
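To make the similarity search concrete, here is a small sketch of vector-based retrieval in plain Python. The three-dimensional vectors are made up for illustration (real embeddings have far more dimensions), and `cosine_similarity` and `retrieve` are hypothetical helpers rather than LangChain or FAISS functions.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec: List[float], store: Dict[str, List[float]], k: int = 2) -> List[str]:
    """Return the k texts whose vectors are most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(query_vec, store[text]),
        reverse=True,
    )
    return ranked[:k]


# Made-up vectors standing in for real embeddings.
toy_store = {
    "I wanted to build things.": [0.9, 0.1, 0.0],
    "The theory people prove things.": [0.2, 0.9, 0.1],
    "My stories were awful.": [0.0, 0.1, 0.9],
}

# Pretend this is the embedding of "what did the author want to build?"
query_vector = [0.8, 0.3, 0.0]

print(retrieve(query_vector, toy_store, k=2))
```

This brute-force version compares the query against every stored vector; libraries such as FAISS exist to make the same kind of lookup efficient at scale.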

- Chat message history: Chat message history is the log of messages exchanged between the user and the model. Passing this history back to the model with each new request gives it the context of the conversation so far, so its replies stay consistent with earlier turns.

from langchain.memory import ChatMessageHistory

from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI(temperature=0, openai_api_key="YourAPIKey")

history = ChatMessageHistory()

history.add_ai_message("hi!")

history.add_user_message("what is the capital of france?")

After adding messages to the history, you can pass this history to the language model to generate context-aware responses:

ai_response = chat(history.messages)

Output:

AIMessage(content='The capital of France is Paris.', additional_kwargs={})

You can then add the AI-generated response to the history:

history.add_ai_message(ai_response.content)

- Document loaders: Document loaders enable you to load data from various sources, such as text files and web pages, into a structured document format that you can then use with your language models.

from langchain.document_loaders import HNLoader

loader = HNLoader("https://news.ycombinator.com/item?id=34422627")

data = loader.load()

print(f"Found {len(data)} comments")

print(f"Here's a sample:\n\n{''.join([x.page_content[:150] for x in data[:2]])}")

Output:

Found 76 comments

Here's a sample:

dang 69 days ago | next [–] Related ongoing thread:GPT-3.5 and Wolfram Alpha via LangChain - https://news.ycombinator.com/item?id=344Ozzie_osman 69 days ago | prev | next [–] LangChain is awesome. For people not sure what it's doing, large language models (LLMs) are

- Text splitters: Text splitters allow you to split a document into smaller chunks. This helps the model process the content more effectively, because a long document often will not fit into a single prompt. Text splitters divide large amounts of text into pieces of a manageable, predictable size.

from langchain.text_splitter import RecursiveCharacterTextSplitter

# This is a long document we can split up.

with open("data/PaulGrahamEssays/worked.txt") as f:
    pg_work = f.read()

print(f"You have {len([pg_work])} document")

text_splitter = RecursiveCharacterTextSplitter(

# Set a really small chunk size, just to show.

chunk_size=150,

chunk_overlap=20,

)

texts = text_splitter.create_documents([pg_work])

print(f"You have {len(texts)} documents")

print("Preview:")

print(texts[0].page_content, "\n")

print(texts[1].page_content)

Output:

Preview:

February 2021Before college the two main things I worked on, outside of school, were writing and programming. I didn't write essays. I wrote what

beginning writers were supposed to write then, and probably still are: short stories. My stories were awful. They had hardly any plot,
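To make the roles of chunk_size and chunk_overlap concrete, here is a simplified sketch of fixed-width splitting in plain Python. It is not how RecursiveCharacterTextSplitter works internally (that splitter prefers to break on separators such as paragraphs and spaces, so its chunks will differ); `split_with_overlap` is a hypothetical helper for illustration.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Cut text into chunks of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters so that context
    is not lost at the boundaries.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
chunks = split_with_overlap(sample, chunk_size=10, chunk_overlap=3)
print(chunks)
# → ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

Each chunk begins with the last three characters of the previous one, which is the overlap at work.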

- VectorStores: VectorStores store embeddings, the numerical representations of the semantic meaning of your documents, and make them searchable. A VectorStore acts as a database for these embeddings, allowing for quick and efficient searching based on semantic similarity.

from langchain.document_loaders import TextLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.vectorstores import FAISS

from langchain.embeddings import OpenAIEmbeddings

loader = TextLoader("data/PaulGrahamEssays/worked.txt")

documents = loader.load()

# Get your splitter ready

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=50)

# Split your docs into texts

texts = text_splitter.split_documents(documents)

# Get embedding engine ready

embeddings = OpenAIEmbeddings(openai_api_key="YourAPIKey")

print(f"You have {len(texts)} documents")

In this example, we first split the document into chunks. Each chunk will get its own embedding, so the number of embeddings should match the number of documents created.

You have 78 documents

Next, create an embedding for each chunk. A VectorStore such as FAISS can then store these embeddings and search them by semantic similarity.

embedding_list = embeddings.embed_documents([text.page_content for text in texts])

print(f"You have {len(embedding_list)} embeddings")

print(f"Here's a sample of one: {embedding_list[0][:3]}...")

Output:

You have 78 embeddings

Here's a sample of one: [-0.0011257503647357225, -0.01111479103565216, -0.012860921211540699]...

- Agents: Agents use a language model to decide which actions to take and in what order. Instead of following a fixed chain, an agent is given a set of tools, and the model dynamically decides which tool to use, observes the result, and repeats until it can answer the query. Each agent can have prompts, memory, and tools tailored to a specific use case, and agents can sit behind web apps, mobile apps, and chatbots.

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

import json

llm = OpenAI(temperature=0, openai_api_key="YourAPIKey")

serpapi_api_key = "..."

toolkit = load_tools(["serpapi"], llm=llm, serpapi_api_key=serpapi_api_key)

agent = initialize_agent(toolkit, llm, agent="zero-shot-react-description", verbose=True, return_intermediate_steps=True)

response = agent({"input": "what was the first album of the band that Natalie Bergman is a part of?"})

With verbose=True, the agent logs each thought, action, and observation before giving its final answer:

> Entering new AgentExecutor chain...
I should try to find out what band Natalie Bergman is a part of.
Action: Search
Action Input: "Natalie Bergman band"
Observation: Natalie Bergman is an American singer-songwriter. She is one half of the duo Wild Belle, along with her brother Elliot Bergman. Her debut solo album, Mercy, was released on Third Man Records on May 7, 2021. She is based in Los Angeles.
Thought: I should search for the debut album of Wild Belle.
Action: Search
Action Input: "Wild Belle debut album"
Observation: Isles
Thought: I now know the final answer.
Final Answer: Isles is the debut album of Wild Belle, the band that Natalie Bergman is a part of.

> Finished chain.
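The thought, action, and observation cycle in the log above can be sketched as a simple loop. In the sketch below, a scripted function stands in for the language model and a dictionary of canned results stands in for the search tool; `scripted_policy`, `search`, and `run_agent` are hypothetical names, and this is an illustration of the loop's shape rather than LangChain's agent implementation.

```python
from typing import Callable, Dict, List, Tuple

# Canned results standing in for a real search API (taken from the log above).
FAKE_SEARCH_RESULTS = {
    "Natalie Bergman band": "Natalie Bergman is one half of the duo Wild Belle.",
    "Wild Belle debut album": "Isles",
}


def search(query: str) -> str:
    return FAKE_SEARCH_RESULTS.get(query, "No results")


def scripted_policy(question: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: decide the next (action, action_input)."""
    if not observations:
        return "Search", "Natalie Bergman band"
    if len(observations) == 1:
        return "Search", "Wild Belle debut album"
    return "Final Answer", f"{observations[-1]} is the debut album of Wild Belle."


def run_agent(
    question: str,
    policy: Callable[[str, List[str]], Tuple[str, str]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Repeatedly ask the policy for an action until it gives a final answer."""
    observations: List[str] = []
    for _ in range(max_steps):
        action, action_input = policy(question, observations)
        if action == "Final Answer":
            return action_input
        # Run the chosen tool and record what it returned.
        observations.append(tools[action](action_input))
    raise RuntimeError("Agent did not finish within max_steps")


answer = run_agent(
    "What was the first album of the band that Natalie Bergman is a part of?",
    scripted_policy,
    {"Search": search},
)
print(answer)
```

The `max_steps` cap is a design choice worth keeping in any real agent loop, since a model that never emits a final answer would otherwise run indefinitely.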

- Toolkits: LangChain includes toolkits that provide a set of prebuilt components and chains that can be customized for specific use cases. Toolkits can help developers get started quickly and provide a solid foundation for building more complex workflows.

Different types of models that are used in LangChain

There are different types of models accessible through the LangChain framework. LangChain is designed to be modular and flexible, allowing developers to choose the right model for their specific use case.

Three primary types of models are used in LangChain:

1. Language models: LLMs are the foundation of many language model applications and are designed to take in a text string and produce a text string. They are trained on vast amounts of text data and can generate high-quality responses to natural language input.

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-ada-001", openai_api_key="YourAPIKey")

llm("What day comes after Friday?")

The model would generate the output “Saturday.”

2. Chat models: Chat models have a more structured API and are designed to handle conversation history and context. They take a list of chat messages as input and return a chat message as output, making it easy to manage the conversational flow and keep track of user interactions.

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage, AIMessage

chat = ChatOpenAI(temperature=1, openai_api_key="YourAPIKey")

chat([SystemMessage(content="You are an unhelpful AI bot that makes a joke at whatever the user says"), HumanMessage(content="I would like to go to New York; how should I do this?")])

In this example, the model responds humorously and unhelpfully to the user’s query about traveling to New York, as instructed by the system message.

AIMessage(content="You could try walking, but I don't recommend it unless you have a lot of time on your hands. Maybe try flapping your arms really hard and see if you can fly there?", additional_kwargs={})

3. Text embedding models: These models accept text as input and produce a list of floating-point numbers, the embedding, that represents the text. Embeddings can be used for a wide range of applications, including document retrieval, grouping, and similarity comparisons.

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings(openai_api_key="YourAPIKey")

text = "Hi! It's time for the beach"

text_embedding = embeddings.embed_query(text)

print( f"Your embedding is length {len(text_embedding)}")

print( f"Here's a sample: {text_embedding[:5]}...")

The generated embeddings are a one-dimensional array (or a list) of numbers that semantically represent the text’s meaning.

By selecting the right LangChain model for their use cases, developers can leverage these powerful tools to build sophisticated conversational AI applications. LangChain’s modular and flexible design makes it easy to mix and match different components and models, allowing developers to create custom workflows that meet their specific needs.


Setting up a LangChain project: Building LLM-powered applications

The simplest way to go deeper into LangChain is to start building an actual application, which we will do in this section by setting up a LangChain project with Python.

Let’s begin by making a new project folder:

mkdir langchain-app

cd langchain-app

Next, create a new Python virtual environment:

python3 -m venv env

Here’s a breakdown of the command:

- python3: This instructs the command to use Python 3 as its interpreter.

- -m venv: This specifies that the command should use the built-in venv module to create virtual environments.

- env: This is the name of the virtual environment you want to create. In this case, the virtual environment will be named env.

A virtual environment is an isolated Python environment that allows you to install project-specific packages and dependencies without interfering with your system-wide Python installation or other projects. This isolation aids in maintaining consistency and avoiding potential conflicts between project requirements.

Once the virtual environment has been created, use the following command to activate it:

source env/bin/activate

With the virtual environment activated, we can begin installing the project’s dependencies. To begin, we will use the following command to install LangChain:

pip install langchain


Let’s continue installing the openai package:

pip install openai

This package is required in order to make use of OpenAI’s Large Language Models (LLMs) in LangChain.

To be able to access OpenAI models with LangChain, you must first obtain an API key from OpenAI. Take the following steps:

1. Go to the OpenAI website: https://www.openai.com/

2. Click on “Get Started” or “Sign in” if you already have an account.

3. Create an account or sign in to your existing account.

4. After signing in, you’ll be directed to the OpenAI Dashboard.

5. Navigate to the API section by clicking “API” in the left sidebar menu or by visiting: https://platform.openai.com/signup

6. Follow the instructions to access or sign up for the API. If you’re eligible, you’ll be provided with an API key.

The API key should look like a long alphanumeric string (e.g., “sk-12345abcdeABCDEfghijKLMNOP”).

To set the OpenAI Key for our environment you can use the command line:

export OPENAI_API_KEY="..."

Or you can include the following two lines of Python code in your script:

import os

os.environ["OPENAI_API_KEY"] = "..."
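Hardcoding a key in a script makes it easy to leak, for example by committing the file to version control. One possible alternative, sketched below with the standard library only, is to read the key from the environment and fail early with a clear message when it is missing. The helper name `require_api_key` is an assumption made for this example.

```python
import os


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the named environment variable, or raise if it is unset or blank."""
    key = os.environ.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return key


try:
    api_key = require_api_key()
    # Avoid printing the key itself; its length is enough to confirm it loaded.
    print("Key loaded; length:", len(api_key))
except RuntimeError as err:
    print(err)
```

With this approach the key is set once in the shell, using the export command shown earlier, and never appears in the source file.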

Using LLMs in LangChain

LangChain provides an LLM class designed for interfacing with various language model providers, such as OpenAI, Cohere, and Hugging Face. This class provides a common interface for all LLM types. In this section, we’ll walk through integrating an LLM with LangChain using the OpenAI wrapper; the functionality highlighted here applies to all LLM types.

Import the LLM wrapper:

To start, import the desired LLM wrapper. In this example, we’ll use the OpenAI wrapper from LangChain:

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-ada-001", n=2, best_of=2)

Generate text:

The most basic functionality of an LLM is generating text. To do this, simply call the LLM instance and pass in a string as the prompt:

llm("Tell me a joke")

Let’s combine everything into a complete Python script in the file langchain-llm-01.py:

import os

from langchain.llms import OpenAI

# Replace "..." with your own API key
os.environ["OPENAI_API_KEY"] = "..."

llm = OpenAI(model_name="text-ada-001", n=2, best_of=2)

result = llm("Tell me a joke")

print(result)

Let’s run this script with:

python langchain-llm-01.py


Generate more detailed output:

You can also call the LLM instance with a list of inputs, obtaining a more complete response that includes multiple top responses and provider-specific information:

llm_result = llm.generate(["Tell me a joke", "Tell me a poem"]*15)

len(llm_result.generations)

The code provided has two main components:

llm_result = llm.generate(["Tell me a joke", "Tell me a poem"]*15)

This line of code calls the generate() method of the llm instance, which is an instance of the LangChain LLM class. The generate() method takes a list of prompts as input. In this case, the list consists of two prompts: “Tell me a joke” and “Tell me a poem”. The *15 operation repeats this list 15 times, resulting in a list with a total of 30 prompts.

When the generate() method is called with this list of prompts, the LLM generates responses for each prompt. The method returns a result object that contains the generated responses as well as additional information.

len(llm_result.generations)

This line of code retrieves the number of generations (generated responses) in the llm_result object. The generations attribute of the llm_result object is a list containing the generated responses for each input prompt. In this case, since there are 30 input prompts, the length of the llm_result.generations list will be 30, indicating that the LLM has generated 30 responses corresponding to the 30 input prompts.
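The prompt count follows from how Python repeats lists, which can be checked without calling any model:

```python
# Multiplying a list by 15 repeats its items 15 times, in order.
prompts = ["Tell me a joke", "Tell me a poem"] * 15

print(len(prompts))                      # → 30
print(prompts[:3])                       # → ['Tell me a joke', 'Tell me a poem', 'Tell me a joke']
print(prompts.count("Tell me a joke"))   # → 15
```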

Access the top responses and provider-specific information:

You can access the generations provided by the LLM through the generations list:

llm_result.generations[0]

You can also retrieve more information about the output by using property llm_output:

llm_result.llm_output

The structure available via llm_output is specific to the LLM you’re using. In the case of OpenAI LLMs, it should contain information about the token usage, e.g.:

{'token_usage': {'completion_tokens': 3903,

'total_tokens': 4023,

'prompt_tokens': 120}}

This is a dictionary representation of the LLM provider-specific information contained in the llm_output attribute. In this case, it shows information about token usage:

- completion_tokens: The number of tokens generated by the LLM as responses for the input prompts. In this case, the LLM generated 3,903 tokens as completion text.

- total_tokens: The total number of tokens used during the generation process, including both the input prompts and the generated responses. In this case, the total token count is 4,023.

- prompt_tokens: The number of tokens in the input prompts. In this case, the prompts contain 120 tokens in total.

These token counts can be useful when working with LLMs, as they help you understand the token usage and costs associated with generating text using the LLM.
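As a quick check of how these numbers relate, the sketch below recomputes the total from its parts and turns it into a cost estimate. The price constant is a made-up placeholder, not a real OpenAI rate; substitute the current rate for the model you use.

```python
# Token usage as reported in the example above.
token_usage = {"completion_tokens": 3903, "total_tokens": 4023, "prompt_tokens": 120}

# total_tokens is the sum of prompt and completion tokens.
computed_total = token_usage["prompt_tokens"] + token_usage["completion_tokens"]
print(computed_total == token_usage["total_tokens"])

# 30 prompts were sent, so the average prompt length is 120 / 30 tokens.
NUM_PROMPTS = 30
avg_prompt_tokens = token_usage["prompt_tokens"] / NUM_PROMPTS
print(avg_prompt_tokens)

# Hypothetical price per 1,000 tokens in USD (placeholder value).
PRICE_PER_1K_TOKENS_USD = 0.0004
estimated_cost = token_usage["total_tokens"] / 1000 * PRICE_PER_1K_TOKENS_USD
print(f"Estimated cost: ${estimated_cost:.6f}")
```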

Let’s combine it all into a new Python script:

import os

from langchain.llms import OpenAI

os.environ["OPENAI_API_KEY"] = "..."

llm = OpenAI(model_name="text-ada-001", n=2, best_of=2)

llm_result = llm.generate(["Tell me a joke", "Tell me a poem"]*15)

print("Number of generations provided: ")

print(len(llm_result.generations))

print("\n")

print("Generation Index 0: ")

print(llm_result.generations[0])

print("\n")

print("Additional information:")

print(llm_result.llm_output)


Estimate token count:

Estimating the number of tokens in a piece of text is useful because models have a context length (and cost more for more tokens), so it’s important to be aware of the text length you’re passing in. By default, the tokens are estimated using tiktoken.

This means you need to install the corresponding package as well:

pip install tiktoken

You can then use the following line of code to get the token estimate:

llm.get_num_tokens("what a joke")

LangChain applications and use cases

LangChain allows developers to access a vast corpus of text data, use pre-trained models for language processing, and build robust applications that can be customized as per user’s interests. Here are some use cases and applications of LangChain:

Language translation: LangChain allows connecting a language model to other sources of data. In translation, this means you can integrate additional data sources and customize the pipeline to build more powerful and differentiated translation applications.

Chatbots and virtual assistants: LangChain can be used to build chatbots and virtual assistants that can understand and respond to natural language queries. Developers can use LangChain to connect language models that understand different languages and use them to power chatbots and virtual assistants.

Sentiment analysis: LangChain can be used to build sentiment analysis applications that can analyze large volumes of text data and identify positive, negative, and neutral sentiment. This can be used for market research, social media monitoring, and other use cases.

Language learning: LangChain can be used to build language learning applications that can help people learn new languages. Developers can use pre-trained models to build applications that adapt to users’ comprehension level and provide them with grammar and vocabulary exercises accordingly.

Content moderation: LangChain can be used to build content moderation applications that can automatically identify and flag inappropriate or offensive content. This can be used on social media platforms, online forums, and other websites to protect users from harmful content.

Best practices for building LLM-powered applications with LangChain

While building LLM-powered applications with LangChain, there are several best practices that developers should follow to ensure that their applications are robust, scalable, and secure. Here are some of the best practices for building LLM-powered applications with LangChain:

Choose the right model: The success of an LLM-powered application depends on the accuracy and performance of the machine learning model that powers it. Developers should choose the right model for their application based on the type of data they are working with, the size of their dataset, and the specific task they are trying to accomplish.

Preprocess your data: Machine learning models require clean, structured, and labeled data to function accurately. Developers should preprocess their data before training their models to ensure that it is formatted correctly, contains no errors, and is labeled accurately.

Fine-tune your model: Pre-trained models can be used as a starting point for LLM-powered applications, but they may not be optimal for specific use cases. Developers should fine-tune their models to improve their accuracy and performance on specific tasks and datasets.

Storage systems: LLM-powered applications need to store data such as documents, embeddings, and conversation history, and sometimes models as well. Choose storage that keeps this data accessible to every part of the application that needs it and that can grow with the application, for example a vector store for embeddings.

Ensure security: Security is crucial for any application that processes user data. Developers should ensure that their LLM-powered applications are secure by encrypting sensitive data, implementing access controls, and using secure communication protocols.

Test your application: Developers should thoroughly test their LLM-powered applications before deploying them to ensure that they function as intended and are free of bugs. Testing should include unit tests, integration tests, and user acceptance testing.
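One way to unit test LLM-dependent code without calling a real model is to pass the model in as a parameter and substitute a fake in tests. The sketch below uses only the standard library; `answer_question` and `fake_llm` are hypothetical names for illustration, and the prompt wording is an assumption.

```python
import unittest
from typing import Callable, List


def answer_question(question: str, llm: Callable[[str], str]) -> str:
    """Build a prompt, call the model, and tidy up its reply."""
    prompt = f"Answer in one short sentence.\nQuestion: {question}\nAnswer:"
    return llm(prompt).strip()


class AnswerQuestionTest(unittest.TestCase):
    def test_prompt_contains_question_and_reply_is_stripped(self):
        seen_prompts: List[str] = []

        def fake_llm(prompt: str) -> str:
            # Record the prompt and return a canned reply instead of calling an API.
            seen_prompts.append(prompt)
            return "  Paris.  \n"

        result = answer_question("What is the capital of France?", fake_llm)

        self.assertEqual(result, "Paris.")
        self.assertEqual(len(seen_prompts), 1)
        self.assertIn("What is the capital of France?", seen_prompts[0])


# Run the test case programmatically so the example works in scripts and notebooks.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(AnswerQuestionTest)
test_result = unittest.TextTestRunner(verbosity=0).run(suite)
print(test_result.wasSuccessful())
```

Tests like this are fast and deterministic, so they can cover prompt construction and output handling, while a smaller set of integration tests exercises the real model.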

Conclusion

LangChain provides developers with a powerful toolkit to build language-based applications. It serves as the structural foundation for applications that understand and process human language, combining language models with prompts, chains, memory, and external data sources so that developers can build innovative applications that change the way we communicate and interact with technology.

This beginner’s guide provides an overview of LangChain, its core concept, use cases and how to get started with building LLM-powered applications with Python. By following the best practices outlined in this guide, developers can ensure that their applications are robust, scalable, secure and ready to provide a seamless user experience. As the field of natural language processing continues to evolve, LangChain is poised to play a significant role in the development of language-based applications in a wide range of industries, from healthcare and finance to social media and education. By empowering developers to build applications that can understand and process human language, LangChain is helping to bridge the gap between humans and technology, making it easier for people to communicate and interact with machines.
